Machine Learning in Production: From Models to Products

by Christian Kästner, Carnegie Mellon University

What does it take to build software products with machine learning, not just models and demos? We assume that you can train a model or build prompts to make predictions, but what does it take to turn the model into a product and actually deploy it, have confidence in its quality, and successfully operate and maintain it at scale? This book explores designing, building, testing, deploying, and operating software products with machine-learned models. It covers the entire lifecycle from a prototype ML model to an entire system deployed in production. It also covers responsible AI (safety, security, fairness, explainability) and MLOps.

Published April 8, 2025 by MIT Press: Official MIT Press Page. All author royalties from the book are donated to Evidence Action.

The book corresponds to the CMU course 17-645 Machine Learning in Production (crosslisted as 11-695 AI Engineering) with publicly available slides and assignments. See also our annotated bibliography on the topic.

The book is released under a Creative Commons license: Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License.

Table of Contents

Summary

Setting the Stage

Requirements Engineering

- When to use Machine Learning

- Setting and Measuring Goals

- Gathering Requirements

- Planning for Mistakes

Architecture and Design

- Thinking like a Software Architect

- Quality Attributes of ML Components

- Deploying a Model

- Automating the Pipeline

- Scaling the System

- Planning for Operations

Quality Assurance

- Quality Assurance Basics

- Model Quality

- Data Quality

- Pipeline Quality

- System Quality

- Testing and Experimenting in Production

Process and Teams

Responsible ML Engineering

Introduction

Machine learning (ML) has enabled incredible advances in the capabilities of software products, allowing us to design systems that would have seemed like science fiction only one or two decades ago, such as personal assistants answering voice prompts, medical diagnoses outperforming specialists, autonomous delivery drones, and tools creatively manipulating photos and video with natural language instructions. Machine learning also has enabled useful features in existing applications, such as suggesting personalized playlists for video and music sites, generating meeting notes for video conferences, and identifying animals in camera traps. Machine learning is an incredibly popular and active field, both in research and actual practice.

Yet, building and deploying products that use machine learning is incredibly challenging. Consultants report that 87 percent of machine-learning projects fail and 53 percent do not make it from prototype to production. Building an accurate model with machine-learning techniques is already difficult, but building a product and a business also requires collecting the right data, building an entire software product around the model, protecting users from harm caused by model mistakes, and successfully deploying, scaling, and operating the product with the machine-learned models. Pulling this off successfully requires a wide range of skills, including data science and statistics, but also domain expertise and data management skills, software engineering capabilities, business skills, and often also knowledge about user experience design, security, safety, and ethics.

In this book, we focus on the engineering aspects of building and deploying software products with machine-learning components, from using simple decision trees trained on some examples in a notebook, to deep neural networks trained with sophisticated automated pipelines, to prompting large language models. We discuss the new challenges machine learning introduces for software projects and how to address them. We explore how data scientists and software engineers can better work together and better understand each other to build not only prototype models but production-ready systems. We adopt an engineering mindset: building systems that are usable, reliable, scalable, responsible, safe, secure, and so forth—but also doing so while navigating trade-offs, uncertainty, time pressure, budget constraints, and incomplete information. We extensively discuss how to responsibly engineer products that protect users from harm, including safety, security, fairness, and accountability issues.

Motivating Example: An Automated Transcription Start-up

Let us illustrate the challenges of building a product with machine-learning components with a scenario, set a few years ago when deep neural networks first started to dominate speech recognition technology.

Assume that, as a data scientist, Sidney has spent the last couple of years at a university pushing the state of the art in speech recognition technology. Specifically, the research was focused on making it easy to specialize speech recognition for specific domains to detect technical terminology and jargon in that domain. The key idea was to train neural networks for speech recognition with lots of data (e.g., PBS transcripts) and combine this with transfer learning on very small annotated domain-specific datasets from experts. Sidney has demonstrated the feasibility of the idea by showing how the models can achieve impressive accuracy for transcribing doctor-patient conversations, transcribing academic conference talks on poverty and inequality research, and subtitling talks at local Ruby programming meetups. Sidney managed to publish the research insights at high-profile academic machine-learning conferences.

During this research, Sidney talked with friends in other university departments who frequently conduct interviews for their research. They often need to transcribe recorded interviews (say forty interviews, each forty to ninety minutes long) for further analysis, and they are frustrated with current transcription services. Most researchers at the time used transcription services that employed other humans to transcribe the audio recordings (e.g., by hiring crowdsourced workers on Amazon’s Mechanical Turk in the back end), usually priced at about $1.50 per minute and with a processing time of several days. At this time, a few services for machine-generated transcriptions and subtitles existed, such as YouTube’s automated subtitles, but their quality was not great, especially when it came to technical vocabulary. Similarly, Sidney found that conference organizers were increasingly interested in providing live captions for talks to improve accessibility for conference attendees. Again, existing live-captioning solutions often either had humans in the loop and were expensive or they produced low-quality transcripts.

Seeing the demand for automated transcriptions of interviews and live captioning, and having achieved good results in academic experiments, Sidney decides to try to commercialize the domain-specific speech-recognition technology by creating a start-up with some friends. The goal is to sell domain-specific transcription and captioning tools to academic researchers and conference organizers, undercutting the prices of existing services at better quality.

Sidney quickly realizes that, even though the models trained in research are a great starting point, it will be a long path toward building a business with a stable product. To build a commercially viable product, there are lots of challenges:

- In academic papers, Sidney’s models outperformed the state-of-the-art models on accuracy measured on test data by a significant margin, but audio files received from customers are often noisier than those used for benchmarking in academic research.

- In the research lab, it did not matter much how long it took to train the model or transcribe an audio file. Now customers get impatient if their audio files are not transcribed within 15 minutes. Worse, live captioning needs to be essentially instantaneous. Making model inference faster and more scalable to process many transcriptions suddenly becomes an important focus for the start-up. Live captioning turns out to be unrealistic after all unless expensive specialized hardware is shipped to the conference venue to achieve latency acceptable in real-time settings.

- The start-up wants to undercut the market significantly with transcriptions at very low prices. However, both training and inference (i.e., actually transcribing an audio file with the model) are computationally expensive, so the amount of money paid to a cloud service provider substantially eats into the profit margins. It takes a lot of experimentation to figure out a reasonable and competitive price that customers are willing to pay and still make a profit.

- Attempts at using new large language models to improve transcripts and adding new features, like automated summaries, run quickly into excessive costs paid to companies providing the model APIs. Attempts to self-host open-source large language models were frustratingly difficult due to brittle and poorly maintained libraries and ended up needing most of the few high-end GPUs the start-up was able to buy, which were all desperately needed already for other training and inference tasks.

- While previously fully focused on data-science research, the team now needs to build a website where users can upload audio files and see results. The team members have no experience with this kind of work and do not enjoy it. The user experience makes it clear that the website was an afterthought: it is tedious to use and looks dated. The team now also needs to deal with payment providers to accept credit card payments. Realizing in the morning that the website has been down all night or that some audio files have been stuck in a processing queue for days is no fun. They know that they should make sure that customer data is stored securely and privately, but they have little experience with how to do this properly, and it is not a priority right now. Hiring a front-end web developer has helped make the site look better and easier to change, but communication between the founders and the newly hired engineers turns out to be much more challenging than anticipated. Their educational backgrounds are widely different and they have a hard time communicating effectively to ensure that the model, back end, and user interface work well together as an integrated product.

- The models were previously trained with many manual steps and a collection of scripts. Now, every time the model architecture is improved or a new domain is added, someone needs to spend a lot of time re-training the models and dealing with problems, restarting jobs, tuning hyperparameters, and so forth. Nobody has updated the TensorFlow library in almost a year, out of fear that something might break. Last week, a model update went spectacularly wrong, causing a major outage and a long night of trying to revert to a previous version, which then required manually re-running a lot of transcription jobs for affected customers.

- After a rough start, customer feedback is now mostly positive, and more customers are signing up, but some customers are constantly unhappy and some report pretty egregious mistakes. One customer sent a complaint with several examples of medical diagnoses incorrectly transcribed with high confidence, and another wrote a blog post about how the transcriptions for speakers with African American vernacular at their conference are barely intelligible. Several team members spend most of their time chasing problems, but debugging remains challenging and every fixed problem surfaces three new ones. Unfortunately, unless a customer complains, the team has no visibility into how the model is really doing. They also only now start collecting basic statistics about whether customers return.

Most of these challenges are probably not very surprising and most are not unique to projects using machine learning. Still, this example illustrates how going from an academic prototype showing the feasibility of a model to a product operating in the real world is far from trivial and requires substantial engineering skills.

For the transcription service, the machine-learned model is clearly the essential core of the entire product. Yet, this example illustrates how, when building a production-ready system, there are many more concerns beyond training an accurate model. Even though many non-ML components are fairly standard, such as the website for uploading audio files, showing transcripts, and accepting payment, as well as the back end for queuing and executing transcriptions, they nonetheless involve substantial engineering effort and additional expertise, far beyond the already very specialized data-science skills to build the model in the first place.

This book will systematically cover engineering aspects of building products with machine-learning components, such as this transcription service. We will argue for a broad view that considers the entire system, not just the machine-learning components. We will cover requirements analysis, design and architecture, quality assurance, and operations, but also how to integrate work on many different artifacts, how to coordinate team members with different backgrounds in a planned process, and how to ensure responsible engineering practices that do not simply ignore fairness, safety, and security. With some engineering discipline, many of the challenges discussed previously can be anticipated; with some up-front investment in planning, design, and automation many problems can be avoided down the road.

Data Scientists and Software Engineers

Building production systems with machine-learning components requires many forms of expertise. Among others,

- We need business skills to identify the problem and build a company.

- We need domain expertise to understand the data and frame the goals for the machine-learning task.

- We need the statistics and data science skills to identify a suitable machine-learning algorithm and model architecture.

- We need the software engineering skills to build a system that integrates the model as one of its many components.

- We need user-interface design skills to understand and plan how humans will interact with the system (and its mistakes).

- We may need system operations skills to handle system deployment, scaling, and monitoring.

- We may want help from data engineers to extract, move, and prepare data at scale.

- We may benefit from experience with prompting large language models and other foundation models, following discoveries and trends in this fast-moving space.

- We may need legal expertise from lawyers who check for compliance with regulations and develop contracts with customers.

- We may need specialized safety and security expertise to ensure the system does not cause harm to the users and environment and does not disclose sensitive information.

- We may want social science skills to study how our system could affect society at large.

- We need project management skills to hold the whole team together and keep it focused on delivering a product.

To keep a manageable scope for this book, we particularly focus on the roles of developers—specifically data scientists and software engineers working together to develop the core technical components of the system. We will touch on other roles occasionally, especially when it comes to deployment, human-AI interaction, project management, and responsible engineering, but generally we will focus on these two roles.

The central theme of this book: how to get data scientists and software engineers to each contribute their distinct expertise while effectively working together.

Data scientists and software engineers tend to have quite different skills and educational backgrounds, which are both needed for building products with machine-learning components.

Data scientists tend to have an educational background (often even a PhD degree) in statistics and machine-learning algorithms. They usually prefer to focus on building models (e.g., feature engineering, model architecture, hyperparameter tuning) but also spend a lot of time on gathering and cleaning data. They use a science-like exploratory workflow, often in computational notebooks like Jupyter. They tend to evaluate their work in terms of accuracy on held-out test data, and maybe start investigating fairness or robustness of models, but tend to rarely focus on other qualities such as inference latency or training cost. A typical data-science course either focuses on how machine-learning algorithms work or on applying machine-learning algorithms to develop models for a clearly defined task with a provided dataset.

In contrast, software engineers tend to focus on delivering software products that meet the user’s needs, ideally within a given budget and time. This may involve steps like understanding the user’s requirements; designing the architecture of a system; implementing, testing, and deploying it at scale; and maintaining and improving it over time. Software engineers often work with uncertainty and budget constraints, and they constantly apply engineering judgment to navigate trade-offs between various qualities, including usability, scalability, maintainability, security, development time, and cost. A typical software-engineering curriculum covers requirements engineering, software design, and quality assurance (e.g., testing, test automation, static analysis), but also topics like distributed systems and security engineering, often ending with a capstone project to work on a product for a customer.

The described distinctions between data scientists and software engineers are certainly oversimplified and overgeneralized, but they characterize many of the differences we observe in practice. It is not that one group is superior to the other, but they have different, complementary expertise and educational backgrounds that are both needed to build products with machine-learning components. For our transcription scenario, we will need data scientists to build the transcription models that form the core of the application, but also software engineers to build and maintain a product around the model.

Some developers may have a broad range of skills that include both data science and software engineering. These types of people are often called “unicorns,” since they are rare or even considered mythical. In practice, most people specialize in one area of expertise. Indeed, many data scientists report that they prefer modeling but do not enjoy infrastructure work, automation, and building products. In contrast, many software engineers have developed an interest in machine learning, but research has shown that without formal training, they tend to approach machine learning rather naively with little focus on feature engineering, they rarely test models for generalization, and they think of more data and deep learning as the only next steps when stuck with low-accuracy models.

In practice, we need to bring people with different expertise who specialize in different aspects of the system together in interdisciplinary teams. However, to make those teams work, team members from different backgrounds need to be able to understand each other and appreciate each other's skills and concerns. This is a central approach of this book: rather than comprehensively teaching software engineering skills to data scientists or comprehensively teaching data science skills to software engineers, we will provide a broad overview of all the concerns that go into building products, involving both data science and software engineering parts. We hope this will provide sufficient context that data scientists and software engineers appreciate each other’s contributions and work together, thus educating T-shaped team members. We will return to many of these ideas in the chapter Interdisciplinary Teams.

Characterizing team members based on depth of expertise (vertically) and breadth of expertise (horizontally): T-shaped team members combine deep expertise in one topic with broad knowledge of others. They are ideal members in interdisciplinary teams, since they can effectively understand and collaborate with others.

Machine-Learning Challenges in Software Projects

There is still an ongoing debate within the software-engineering community on whether machine learning fundamentally changes how we engineer software systems or whether we essentially just need to rigorously apply existing engineering practices that have long been taught to aspiring software engineers.

Let us look at three challenges introduced by machine learning, which we will explore in much more detail in later chapters of this book.

Lack of Specifications

In traditional software engineering, abstraction, reuse, and composition are key strategies that allow us to decompose systems, work on components in parallel, test parts in isolation, and put the pieces together for the final system. However, a key requirement for such decomposition is that we can come up with a specification of what each component is supposed to do, so that we can divide the work and separately test each component against its specification and rely on other components without having to know all their implementation details. Specifications also allow us to work with opaque components where we do not have access to the source in the first place or do not understand the implementation.

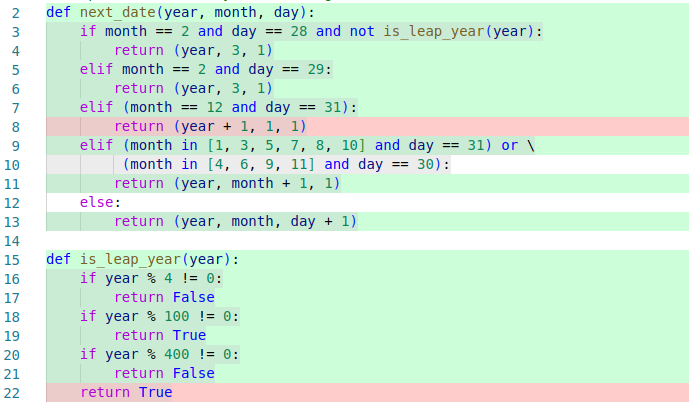

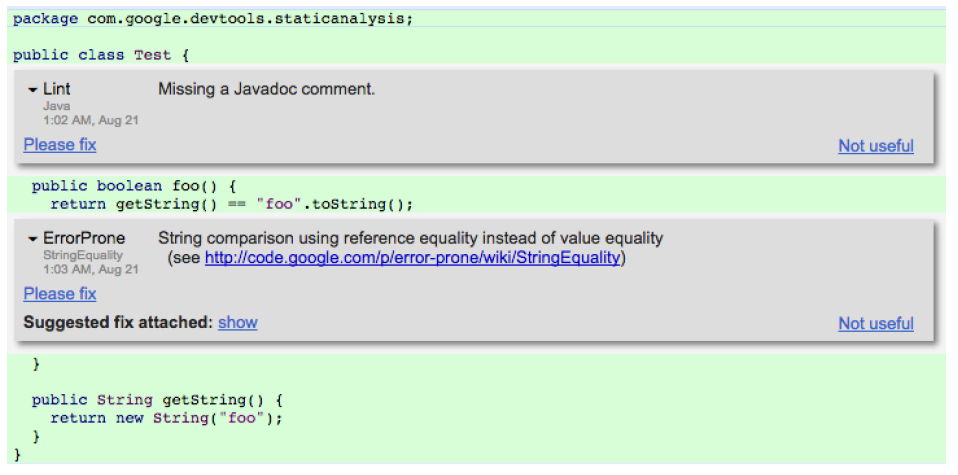

    def compute_deductions(agi, expenses):
        """
        Compute deductions based on provided adjusted gross income
        and expenses in customer data.

        See tax code 26 U.S. Code A.1.B, PART VI.

        Adjusted gross income must be a positive value.
        Returns computed deduction value.
        """

Example of a textual specification for a traditional software function as common in software projects, describing what the function does and how to compute the results (pointing to another document for details in this case). A developer can implement this function according to the specification, without needing to understand the rest of the system. Another developer working on another part of the system can rely on this function without having access to its implementation.

With machine learning, we have a hard time coming up with good specifications. We can generally describe the task, but not how to do it, or what the precise expected mapping between inputs and outputs is—it is less obvious how such a description could be used for testing or to provide reliable contracts to the clients of the function. With foundation models like GPT-4, we even just provide natural language prompts and hope the model understands our intention. We use machine learning precisely because we do not know how to specify and implement specific functions.

    def transcribe(audio_file):
        """
        Return the text spoken within the audio file.
        ????
        """

Example of a possible inference function for a model, which is difficult to specify. The documentation indicates the purpose of the function, but it is less obvious when an implementation would be considered “correct” or “good enough.” Whether a learned model is good enough depends on how and for what it is used in the system.

Machine learning introduces a fundamental shift from deductive reasoning (mathy, logic-based, applying logic rules) to inductive reasoning (sciency, generalizing from observation). As we will discuss at length in the chapter Model Quality, we can no longer say whether a component is correct, because we do not have a specification of what it means to be correct, but we evaluate whether it works well enough (on average) on some test data or in the context of a concrete system. We actually do not expect a perfect answer from a machine-learned model for every input, which also means our system must be able to tolerate some incorrect answers, which influences the way we design the rest of the system and the way we validate the systems as a whole.
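To make "works well enough (on average) on some test data" concrete, the following is a minimal sketch, not the book's evaluation method. It assumes word error rate as the quality measure, and the helper names (`word_error_rate`, `good_enough`) and the 0.15 threshold are hypothetical choices for illustration; an appropriate measure and threshold depend on how the transcripts are used in the system.

```python
from typing import Callable, List, Tuple


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # Dynamic-programming edit distance, keeping only one row at a time.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1] / len(ref)


def good_enough(transcribe: Callable[[str], str],
                test_set: List[Tuple[str, str]],
                max_mean_wer: float = 0.15) -> bool:
    """Accept the model if its average error on held-out data is below a threshold.

    test_set holds (audio_file, expected_transcript) pairs. The threshold is an
    assumption for illustration, not a value from the book.
    """
    if not test_set:
        raise ValueError("test set must not be empty")
    rates = [word_error_rate(expected, transcribe(audio)) for audio, expected in test_set]
    return sum(rates) / len(rates) <= max_mean_wer
```

Note that the check passes or fails on an average: an individual transcript may still contain mistakes even when the model as a whole is accepted.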

While this shift seems drastic, software engineering has a long history of building safe systems with unreliable components—machine-learned models may be just one more type of unreliable component. In practice, comprehensive and formal specifications of software components are rare. Instead, engineers routinely work with missing, incomplete, and vague textual specifications, compensating with agile methods, with communication across teams, and with lots of testing. Machine learning may push us further down this route than what was needed in many traditional software projects, but we already have engineering practices for dealing with the challenges of missing or vague specifications. We will focus on these issues throughout the book, especially in the quality assurance chapters.
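One common way to design around an unreliable component is to surface its uncertainty to the rest of the system. The sketch below assumes, hypothetically, that the transcription model reports a confidence score per segment; the `Segment` structure, the `render_transcript` function, and the 0.8 threshold are illustrative names and values, not part of the book. Confidence scores can themselves be wrong (the scenario mentions mistakes made with high confidence), so this is a mitigation rather than a guarantee.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    """One transcribed stretch of audio (hypothetical structure)."""
    text: str
    confidence: float  # model-reported score in [0, 1]; may be miscalibrated


def render_transcript(segments: List[Segment], min_confidence: float = 0.8) -> str:
    """Join segments in order, visibly marking those the model is unsure about."""
    parts = []
    for segment in segments:
        if segment.confidence >= min_confidence:
            parts.append(segment.text)
        else:
            # Flag instead of silently presenting a likely mistake as fact.
            parts.append(f"[unsure: {segment.text}]")
    return " ".join(parts)
```

For example, `render_transcript([Segment("patient reports", 0.95), Segment("asthma", 0.4)])` marks only the second segment, so a reader knows which part to double-check against the recording.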

Interacting with the Real World

Most software products, including those with machine-learning components, interact with the environment (aka “the real world”). For example, shopping recommendations influence how people behave, autonomous trains operate tons of steel at high speeds through physical environments, and our transcription example may influence what medical diagnoses are recorded with potentially life-threatening consequences from wrong transcriptions. Such systems often raise safety concerns: when things go wrong, we may physically harm people or the environment, cause stress and anxiety, or create society-scale disruptions.

Machine-learned models are often trained on data that comes from the environment, such as voice recordings from TV shows that were manually subtitled by humans. If the observation of the environment is skewed or observed actions were biased in the first place, we are prone to run into fairness issues, such as transcription models that struggle with certain dialects or poorly transcribe medical conversations about diseases affecting only women. Furthermore, data we observe from the environment may have been influenced by prior predictions from machine-learning models, resulting in potential feedback loops. For example, YouTube used to recommend conspiracy-theory videos much more than other videos, because its models realized that people who watch these types of videos tend to watch them a lot; by recommending these videos more frequently, YouTube could keep people longer on the platform, thus making people watch even more of these conspiracy videos and making the predictions even stronger in future versions of the model. YouTube eventually fixed this issue not with better machine learning but by hard-coding rules around the machine-learned model.
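The idea of hard-coding rules around a machine-learned model can be sketched generically as follows. This is an illustration only and makes no claim about how any real platform implemented its fix; the `Video` type, the `recommend` function, and the notion of a blocked-topic set are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass(frozen=True)
class Video:
    """Hypothetical candidate item with a coarse topic label."""
    video_id: str
    topic: str


def recommend(candidates: List[Video],
              predicted_watch_time: Callable[[Video], float],
              blocked_topics: Set[str],
              k: int = 3) -> List[Video]:
    """Rank candidates by the model's score, after applying a hard-coded rule."""
    # The rule is applied outside the model, so it holds no matter what the
    # model has learned from past (possibly self-reinforcing) user behavior.
    allowed = [video for video in candidates if video.topic not in blocked_topics]
    return sorted(allowed, key=predicted_watch_time, reverse=True)[:k]
```

The design choice here is that the rule lives in ordinary code around the model rather than in the training data, so it can be reviewed, tested, and changed independently of retraining.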

As a system with machine-learning components influences the world, users may adapt their behavior in response, sometimes in unexpected ways, changing the nature of the very objects that the system was designed to model and predict in the first place. For example, conference speakers could modify their pronunciation to avoid common mistranscriptions by our transcription service. Through adversarial attacks, users may identify how models operate and try to game the system with specifically crafted inputs, for example, tricking face recognition algorithms with custom glasses. User behavior may shift over time, intentionally or naturally, resulting in drift in data distributions.
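A simple way to notice drift in a categorical input property is to compare its distribution in recent data against a reference window. The sketch below uses total variation distance as one possible measure; the function names, the 0.2 threshold, and the idea of tagging each uploaded recording with a domain label are assumptions for illustration, not practices described in the text.

```python
from collections import Counter
from typing import Hashable, Iterable


def total_variation_distance(reference: Iterable[Hashable],
                             recent: Iterable[Hashable]) -> float:
    """Half the sum of absolute differences between two category distributions."""
    ref_counts, new_counts = Counter(reference), Counter(recent)
    ref_total, new_total = sum(ref_counts.values()), sum(new_counts.values())
    if ref_total == 0 or new_total == 0:
        raise ValueError("both windows must contain at least one observation")
    categories = set(ref_counts) | set(new_counts)
    return 0.5 * sum(
        abs(ref_counts[c] / ref_total - new_counts[c] / new_total)
        for c in categories
    )


def drifted(reference: Iterable[Hashable],
            recent: Iterable[Hashable],
            threshold: float = 0.2) -> bool:
    """Flag drift when the distributions differ by more than the threshold."""
    return total_variation_distance(reference, recent) > threshold
```

For instance, if 80 percent of last quarter's recordings were tagged as medical but only 50 percent of this month's are, the distance is about 0.3 and the check above would flag it for investigation.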

Yet, software systems have always interacted with the real world. Software without machine learning has caused harm, such as delivering radiation overdoses or crashing planes and spaceships. To prevent such issues, software engineers focus on requirements engineering, hazard analysis, and threat modeling in order to understand how the system interacts with the environment, to anticipate problems and analyze risks, and to design safety and security mechanisms into the system. The use of machine learning may make this analysis more difficult because we are introducing more components that we do not fully understand and that are based on data that may not be neutral or representative. These additional difficulties make it even more important to take requirements engineering seriously in projects with machine-learning components. We will focus on these issues extensively in the requirements engineering and responsible engineering chapters.

Data Focused and Scalable

Machine learning is often used to train models on massive amounts of data that do not fit on a single machine. Systems with machine-learning components often benefit from scale through the machine-learning flywheel effect: with more users, the system can collect data from those users and use that data to train better models, which again may attract more users. To operate at scale, models are often deployed using distributed computing, on devices, in data centers, or with cloud infrastructure.

The machine-learning flywheel.

Increasingly large models, including large language models and other foundation models, require expensive high-end hardware even just for making predictions, causing substantial operating challenges and cost and de facto enforcing that models are not only trained but also deployed on dedicated machines and accessed remotely.

That is, when using machine learning, we may put much more emphasis on operating with huge amounts of data and expensive computations at a massive scale, demanding substantial hardware and software infrastructure and causing substantial complexity for data management, deployment, and operation. This may require many additional skills and may require close collaboration with operators.

Yet, data management and scalability are not entirely new challenges either. Systems without machine-learning components have been operated in the cloud for well over a decade and have managed large amounts of data with data warehouses, batch processing, and stream processing. However, the demands and complexity for an average system with machine-learning components may well be higher than the demands for a typical software system without machine learning. We will discuss the design and operation of scalable systems primarily in the design and architecture chapters.

From Traditional Software to Machine Learning

Our conjecture in this book is that machine learning introduces many challenges to building production systems, but that there is also a vast amount of prior software engineering knowledge and experience that can be leveraged. While training models requires unique insights and skills, we argue that few challenges introduced by machine learning are uniquely new when it comes to building production systems around such models. However, importantly, the use of machine learning often introduces complexity and risks that call for more careful engineering.

Overall, we see a spectrum from low-risk to high-risk software systems. We tend to have a good handle on building simple, low-risk software systems, such as a restaurant website or a podcast hosting site. When we build systems with higher risks, such as medical records and payment software, we tend to step up our engineering practices and are more attentive to requirements engineering, risk analysis, quality assurance, and security practices. At the far end, we also know how to build complex and high-risk systems, such as control software for planes and nuclear power plants; it is just very expensive because we slow down and invest heavily in strong engineering processes and practices.

Our conjecture: more software products with machine-learning components tend to fall toward the more complex and more risky end of the spectrum of possible software systems, compared to traditional products without machine learning, calling for more investment in rigorous engineering practices.

Our conjecture is that we tend to attempt much more ambitious and risky projects with machine learning. We tend to introduce machine-learned models for challenging tasks, even when they can make mistakes. It is not that machine learning automatically makes projects riskier—and there certainly are also many low-risk systems with machine-learning components—but commonly projects use machine learning for novel and disruptive ideas at scale without being well prepared for what happens if model predictions are wrong or biased. We argue that we are less likely to get away with sloppy engineering practices in machine-learning projects, but will likely need to level up our engineering practices. We should not pretend that systems with machine-learning components, including our transcription service example, are easy and harmless projects when they are not. We need to acknowledge that they may pose risks, may be harder to design and operate responsibly, may be harder to test and monitor, and may need substantially more software and hardware infrastructure. Throughout this book, we give an overview of many of these practices that can be used to gain more confidence in even more complex and risky systems.

A Foundation for MLOps and Responsible Engineering

In many ways, this is also a book about MLOps and about responsible ML engineering (or ethical AI), but those topics are necessarily embedded in a larger context.

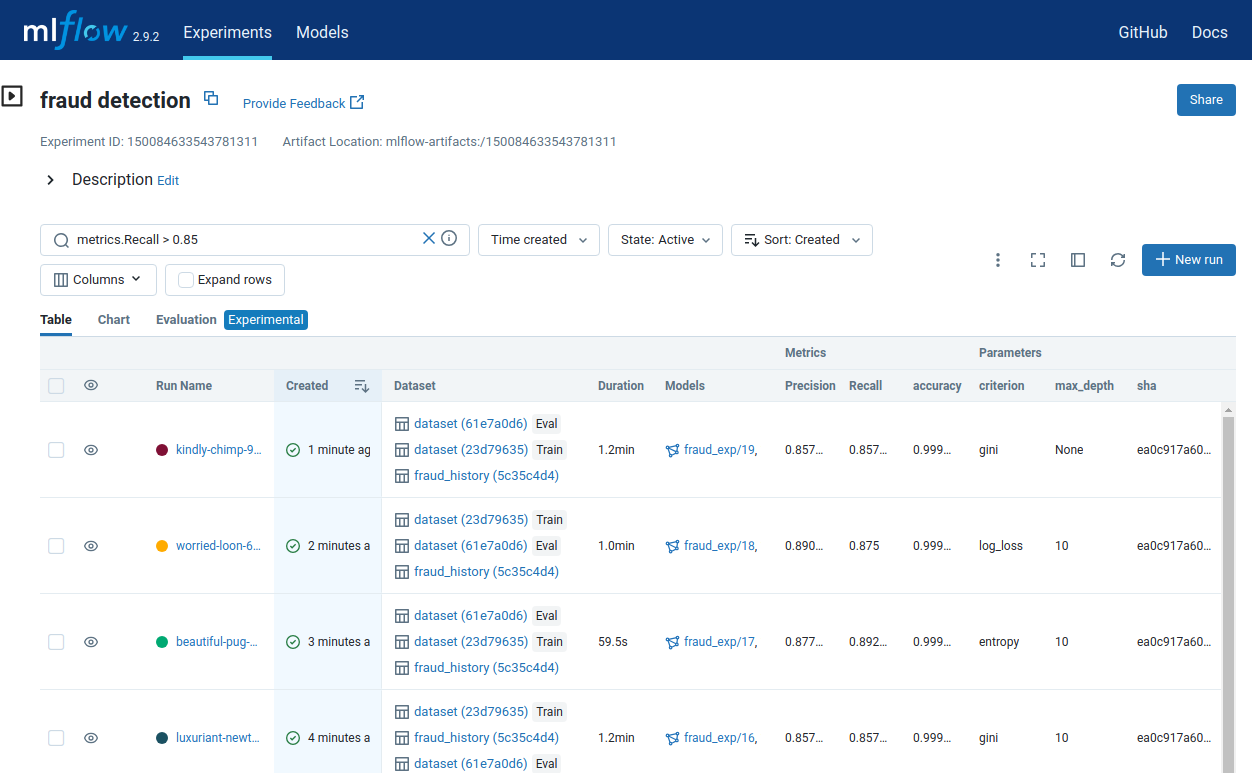

MLOps. MLOps and related concepts like DevOps and LLMOps describe efforts to automate machine-learning pipelines and make it easy and reliable to deploy, update, monitor, and operate models. MLOps is often described in terms of a vast market of tools like Kubeflow for scalable machine-learning workflows, Great Expectations for data quality testing, MLflow for experiment tracking, Evidently AI for model monitoring, and Amazon SageMaker as an integrated end-to-end ML platform, with many tutorials, talks, blog posts, and books covering these tools.

In this book, we discuss the underlying fundamentals of MLOps and how they must be considered as part of the entire development process. However, those fundamentals necessarily cut across the entire book, as they touch equally on requirements challenges (e.g., identifying data and operational requirements), design challenges (e.g., automating pipelines, building model inference services, designing for big data processing), quality assurance challenges (e.g., automating model, data, and pipeline testing), safety, security, and fairness challenges (e.g., monitoring fairness measures, incident response planning), and teamwork and process challenges (e.g., culture of collaboration, tracking technical debt). While MLOps is a constant theme throughout the book, the closest the book comes to dedicated coverage of MLOps is (a) the chapter Planning for Operations, which discusses proactive design to support deployment and monitoring and provides an overview of the MLOps tooling landscape, and (b) the chapter Interdisciplinary Teams, which discusses the defining culture of collaboration in MLOps and DevOps shaped through joint goals, joint vocabulary, and joint tools.

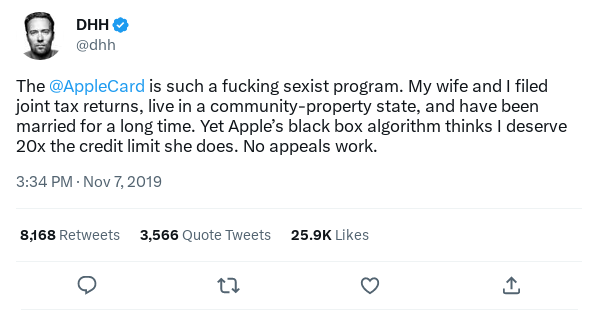

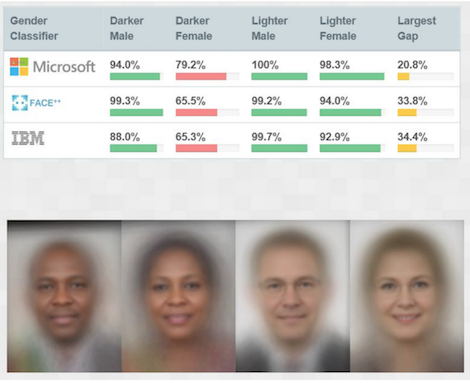

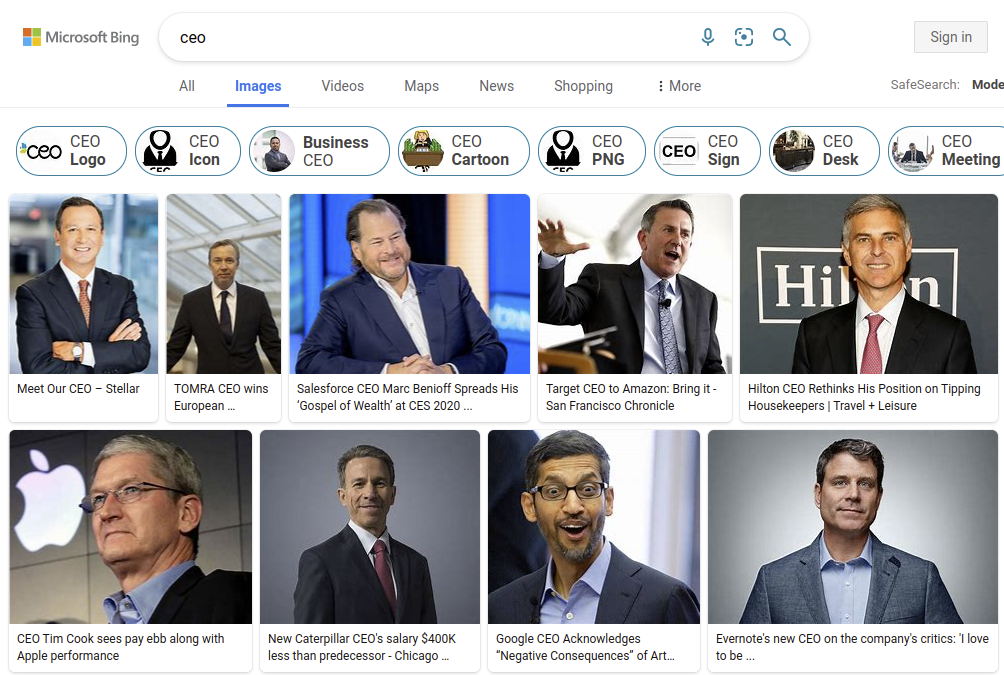

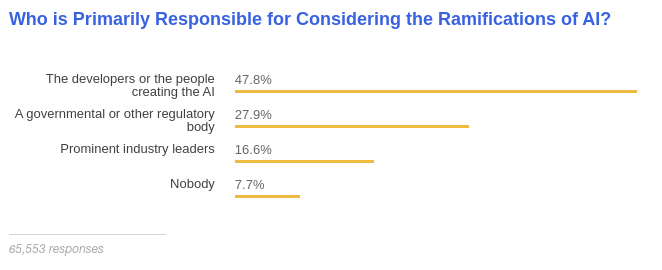

Responsible ML engineering and ethical AI. Stories of unfair machine-learning systems discriminating against female and Black users are well represented in machine-learning discourse and popular media, as are stories about unsafe autonomous vehicles, robots that can be tricked with fake photos and stickers, algorithms creating fake news and exacerbating societal-level polarization, and fears of superintelligent systems creating existential risks to human existence. Machine learning gives developers great powers but also massive opportunities for causing harm. Most of these harms are not intentional but are caused through negligence and as unintended consequences of building a complex system. Many researchers, practitioners, and policymakers explore how machine learning can be used responsibly and ethically, associated with concepts like safety, security, fairness, inclusiveness, transparency, accountability, empowerment, human rights and dignity, and peace.

This book extensively covers responsible ML engineering. But again, responsible engineering or facets like safety, security, and fairness cannot be considered in isolation or in a model-centric way. There are no magic tools that can make a model secure or ensure fairness. Instead, responsible engineering, as we will relentlessly argue, requires a holistic view of the system and its development process, understanding how a model interacts with other components within a system and how that system interacts with the environment. Responsible engineering necessarily cuts across the entire development life cycle. Responsible engineering must be deeply embedded in all development activities. While the final seven chapters of this book are explicitly dedicated to responsible engineering tools and concepts, they build on the foundations laid in the previous chapters about understanding and negotiating system requirements, reasoning about model mistakes, considering the entire system architecture, testing and monitoring all parts, and creating effective development processes with well-working teams having broad and diverse expertise. These topics are necessarily interconnected. Without such grounding and broad perspective, attempts to tackle safety, security, or fairness are often narrow, naive, and ineffective.

From decision trees to deep learning to large language models. Machine-learning innovations continue at a rapid pace. For example, in recent years, first the introduction of deep learning on image classification problems and then the introduction of the transformer architecture for natural language models shifted the prevailing machine-learning discourse and enabled new and more ambitious applications. At the time of this writing, we are well into another substantial shift with the introduction of large language models and other foundation models, changing practices away from training custom models to prompting huge general-purpose models trained by others. Surely, other disruptive innovations will follow. In each iteration, new approaches like deep learning and foundation models add tools with different capabilities and trade-offs to an engineer’s toolbox but do not entirely replace prior approaches.

Throughout this book, rather than chasing the latest tool, we focus on the underlying enduring ideas and principles—such as (1) understanding customer priorities and tolerance for mistakes, (2) designing safe systems with unreliable components, (3) navigating conflicting qualities like accuracy, operating cost, latency, and time to release, (4) planning a responsible testing strategy, and (5) designing systems that can be updated rapidly and monitored in production. These and many other ideas and principles are deeply grounded in a long history of software engineering and remain important throughout technological advances on the machine-learning side. For example, as we will discuss, large language models substantially shift trade-offs and costs in system architectures and raise new safety and security concerns, such as generating propaganda and prompt injection attacks—this triggered lots of new research and tooling, but insights about hazard analysis, architectural reasoning about trade-offs, distributed systems, automation, model testing, and threat modeling that are foundational to MLOps and responsible engineering remain just as important.

This book leans into the interconnected, interdisciplinary, and holistic nature of building complex software products with machine-learning components. While we discuss recent innovations and challenges and point to many state-of-the-art tools, we also try to step back and discuss the underlying big challenges and big ideas and how they all fit together.

Summary

Machine learning has enabled many great and novel product ideas and features. With attention focused on innovations in machine-learning algorithms and models, the engineering challenges of transitioning from a model prototype to a production-ready system are often underestimated. When building products that could be deployed in production, a machine-learned model is only one of many components, though often an important or central one. Many challenges arise from building a system around a model, including building the right system (requirements), building it in a scalable and robust way (architecture), ensuring that it can cope with mistakes made by the model (requirements, user-interface design, quality assurance), and ensuring that it can be updated and monitored in production (operations).

Building products with machine-learning components requires a truly interdisciplinary effort covering a wide range of expertise, often beyond the capabilities of a single person. It really requires data scientists, software engineers, and others to work together, understand each other, and communicate effectively. This book hopes to help facilitate a better understanding.

Finally, machine learning may introduce characteristics that are different from many traditional software engineering projects, for example, through the lack of specifications, interactions with the real world, or data-focused and scalable designs. Machine learning often introduces additional complexity and possibly additional risks that call for responsible engineering practices. Whether we need entirely new practices, need to tailor established practices, or just need more of the same is still an open debate, but most projects can clearly benefit from more engineering discipline.

The rest of this book will dive into many of these topics in much more depth, including requirements, architecture, quality assurance, operations, teamwork, and process. This book extensively covers MLOps and responsible ML engineering, but those topics necessarily cut across many chapters.

Further Readings

- An excellent book that discusses many engineering challenges for building software products with machine-learning components based on a decade of experience in big-tech companies, which provided much of the inspiration for our undertaking: 🕮 Hulten, Geoff. Building Intelligent Systems: A Guide to Machine Learning Engineering. Apress. 2018.

- There are many books that provide an excellent introduction to machine learning and data science. As a practical introduction, we recommend 🕮 Géron, Aurélien. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow. 3rd Edition, O'Reilly, 2022.

- An excellent book discussing the business aspects of machine learning: 🕮 Ajay Agrawal, Joshua Gans, Avi Goldfarb. Prediction Machines: The Simple Economics of Artificial Intelligence. Harvard Business Review Press, 2018.

- Recent articles discussing whether and to what degree machine learning actually introduces new or harder software engineering challenges: 🗎 Ozkaya, Ipek. “What Is Really Different in Engineering AI-Enabled Systems?” IEEE Software 37, no. 4 (2020): 3–6. 🗎 Shaw, Mary, and Liming Zhu. “Can Software Engineering Harness the Benefits of Advanced AI?” IEEE Software 39, no. 6 (2022): 99–104.

- In-depth case studies of specific production systems with machine-learning components that highlight various engineering challenges beyond just training the models: 🗎 Passi, Samir, and Phoebe Sengers. “Making Data Science Systems Work.” Big Data & Society 7, no. 2 (2020). 🗎 Sculley, D., Matthew Eric Otey, Michael Pohl, Bridget Spitznagel, John Hainsworth, and Yunkai Zhou. 2011. “Detecting Adversarial Advertisements in the Wild.” Proceedings of the International Conference on Knowledge Discovery and Data Mining. 🗎 Sendak, Mark P., William Ratliff, Dina Sarro, Elizabeth Alderton, Joseph Futoma, Michael Gao, Marshall Nichols et al. “Real-World Integration of a Sepsis Deep Learning Technology into Routine Clinical Care: Implementation Study.” JMIR Medical Informatics 8, no. 7 (2020): e15182.

- A study of software engineering challenges in machine-learning projects at Microsoft: 🗎 Amershi, Saleema, Andrew Begel, Christian Bird, Robert DeLine, Harald Gall, Ece Kamar, Nachiappan Nagappan, Besmira Nushi, and Thomas Zimmermann. “Software Engineering for Machine Learning: A Case Study.” In International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP), pp. 291–300. IEEE, 2019.

- A study highlighting the challenges in building products with machine-learning components and how many of them relate to poor engineering practices and poor coordination between software engineers and data scientists: 🗎 Nahar, Nadia, Shurui Zhou, Grace Lewis, and Christian Kästner. “Collaboration Challenges in Building ML-Enabled Systems: Communication, Documentation, Engineering, and Process.” Proc. International Conference on Software Engineering, 2022.

- A study of software engineering challenges in deep learning projects: 🗎 Arpteg, Anders, Björn Brinne, Luka Crnkovic-Friis, and Jan Bosch. “Software Engineering Challenges of Deep Learning.” In Euromicro Conference on Software Engineering and Advanced Applications (SEAA), pp. 50–59. IEEE, 2018.

- A study of how people without data science training (mostly software engineers) build models: 🗎 Yang, Qian, Jina Suh, Nan-Chen Chen, and Gonzalo Ramos. “Grounding Interactive Machine Learning Tool Design in How Non-experts Actually Build Models.” In Proceedings of the Designing Interactive Systems Conference, pp. 573–584. 2018.

- An interesting study of engineering challenges when it comes to building machine-learning products in everyday companies outside of Big Tech: 🗎 Hopkins, Aspen, and Serena Booth. “Machine Learning Practices Outside Big Tech: How Resource Constraints Challenge Responsible Development.” In Proceedings of the Conference on AI, Ethics, and Society, 2021.

- Attempts to quantify how commonly machine-learning projects fail: 🔗 VentureBeat. “Why Do 87% of Data Science Projects Never Make It into Production?” 2019. https://venturebeat.com/ai/why-do-87-of-data-science-projects-never-make-it-into-production/. 🔗 Gartner on AI Engineering: https://www.gartner.com/en/newsroom/press-releases/2020-10-19-gartner-identifies-the-top-strategic-technology-trends-for-2021.

- An example of an evasion attack on a face recognition model with specifically crafted glasses: 🗎 Sharif, Mahmood, Sruti Bhagavatula, Lujo Bauer, and Michael K. Reiter. “Accessorize to a Crime: Real and Stealthy Attacks on State-of-the-Art Face Recognition.” In Proceedings of the Conference on Computer and Communications Security, pp. 1528–1540. 2016.

- Examples of software disasters that did not need machine learning, often caused by problems when the software interacts with the environment: 🗎 Leveson, Nancy G., and Clark S. Turner. “An Investigation of the Therac-25 Accidents.” Computer 26, no. 7 (1993): 18–41. 🔗 Software bugs with significant consequences: https://en.wikipedia.org/wiki/List_of_software_bugs.

As with all chapters, this text is released under the Creative Commons BY-NC-ND 4.0 license. Last updated on 2024-06-13.

From Models to Systems

In software products, machine learning is almost always used as a component in a larger system—often a very important component, but usually still just one among many components. Yet, most education and research on machine learning has an entirely model-centric view, focusing narrowly on the learning algorithms and building models, but rarely considering how the model would actually be used for a practical goal.

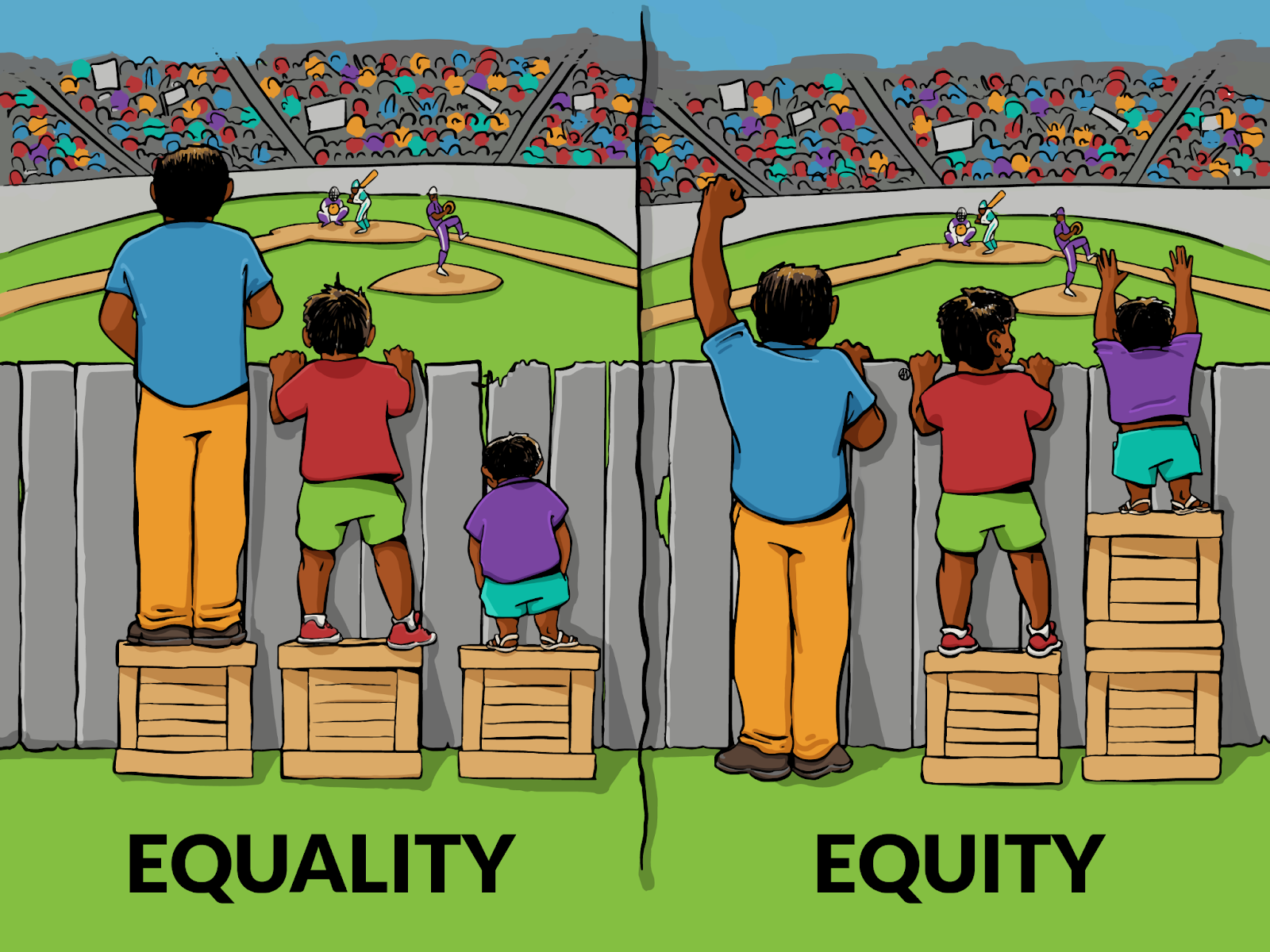

Adopting a system-wide view is important in many ways. We need to understand the goal of the system and how the model supports that goal but also introduces risks. Key design decisions require an understanding of the full system and its environment, such as whether to act autonomously based on predictions or whether and when to check those predictions with non-ML code, with other models, or with humans in the loop. Such decisions matter substantially for how a system can cope with mistakes and has implications for usability, safety, and fairness. Before the rest of the book looks at various facets of building software systems with ML components, we dive a little deeper into how machine learning relates to the rest of the system and why a system-level view is so important.

ML and Non-ML Components in a System

In production systems, machine learning is used to train models to make predictions that are used in the system. In some systems, those predictions are the very core of the system, whereas in others they provide only an auxiliary feature.

In the transcription service start-up from the previous chapter, machine learning provides the very core functionality of the system that converts uploaded audio files into text. Yet, to turn the model into a product, many other (usually non-ML) parts are needed, such as (a) a user interface to create user accounts, upload audio files, and show results, (b) a data storage and processing infrastructure to queue and store transcriptions and process them at scale, (c) a payment service, and (d) monitoring infrastructure to ensure the system is operating within expected parameters.

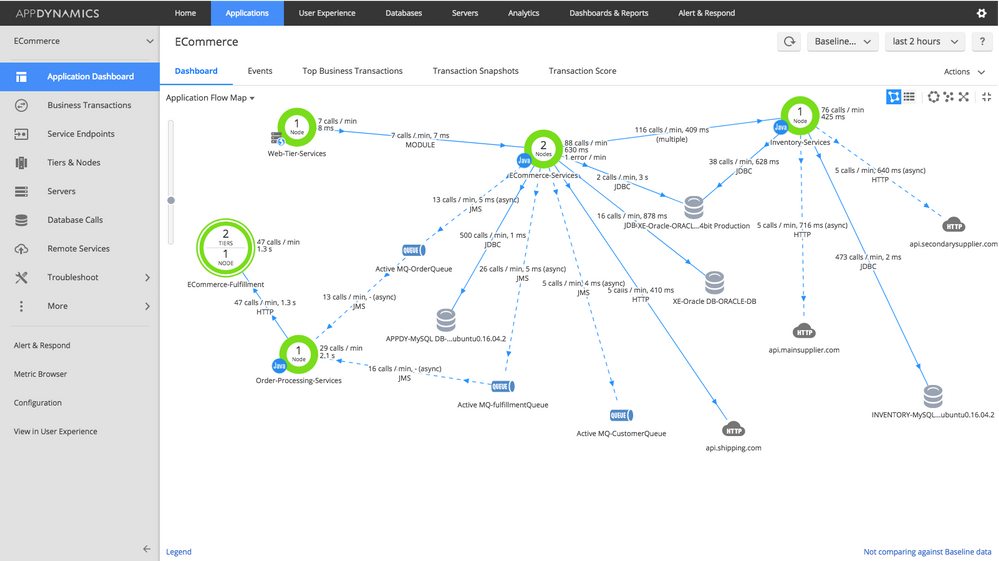

An architecture sketch of a transcription system, illustrating the central ML component for speech recognition and many non-ML components.

At the same time, many traditional software systems use machine learning for some extra “add-on” functionality. For example, a traditional end-user tax software product may add a module to predict the audit risk for a specific customer, a word processor may add a better grammar checker, a graphics program may add smart filters, and a photo album application may automatically tag friends. In all these cases, machine learning is added as an often relatively small component to provide some added value to an existing system.

An architecture sketch of the tax system, illustrating the ML component for audit risk as an addition to many non-ML components in the system.

Traditional Model Focus (Data Science)

Much of the attention in machine-learning education and research has been on learning accurate models from given data. Machine-learning education typically focuses either on how specific machine-learning algorithms work (e.g., the internals of SVM, deep neural networks, or transformer architectures) or how to use them to train accurate models from provided data. Similarly, machine-learning research focuses primarily on the learning steps, trying to improve the prediction accuracy of models trained on benchmark datasets (e.g., new deep neural network architectures, new embeddings).

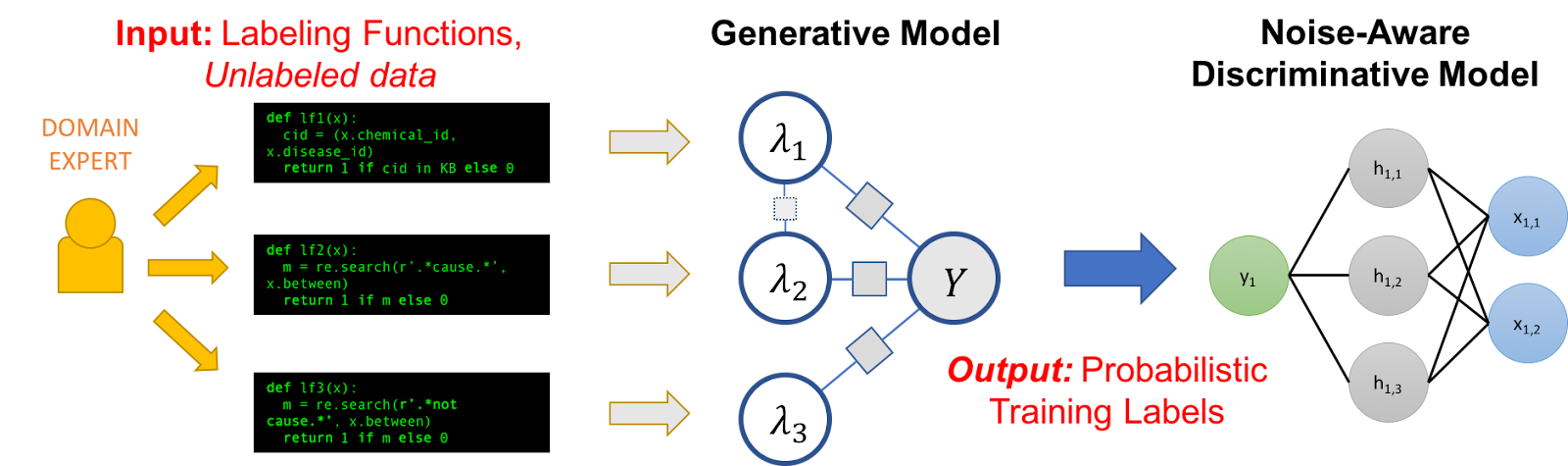

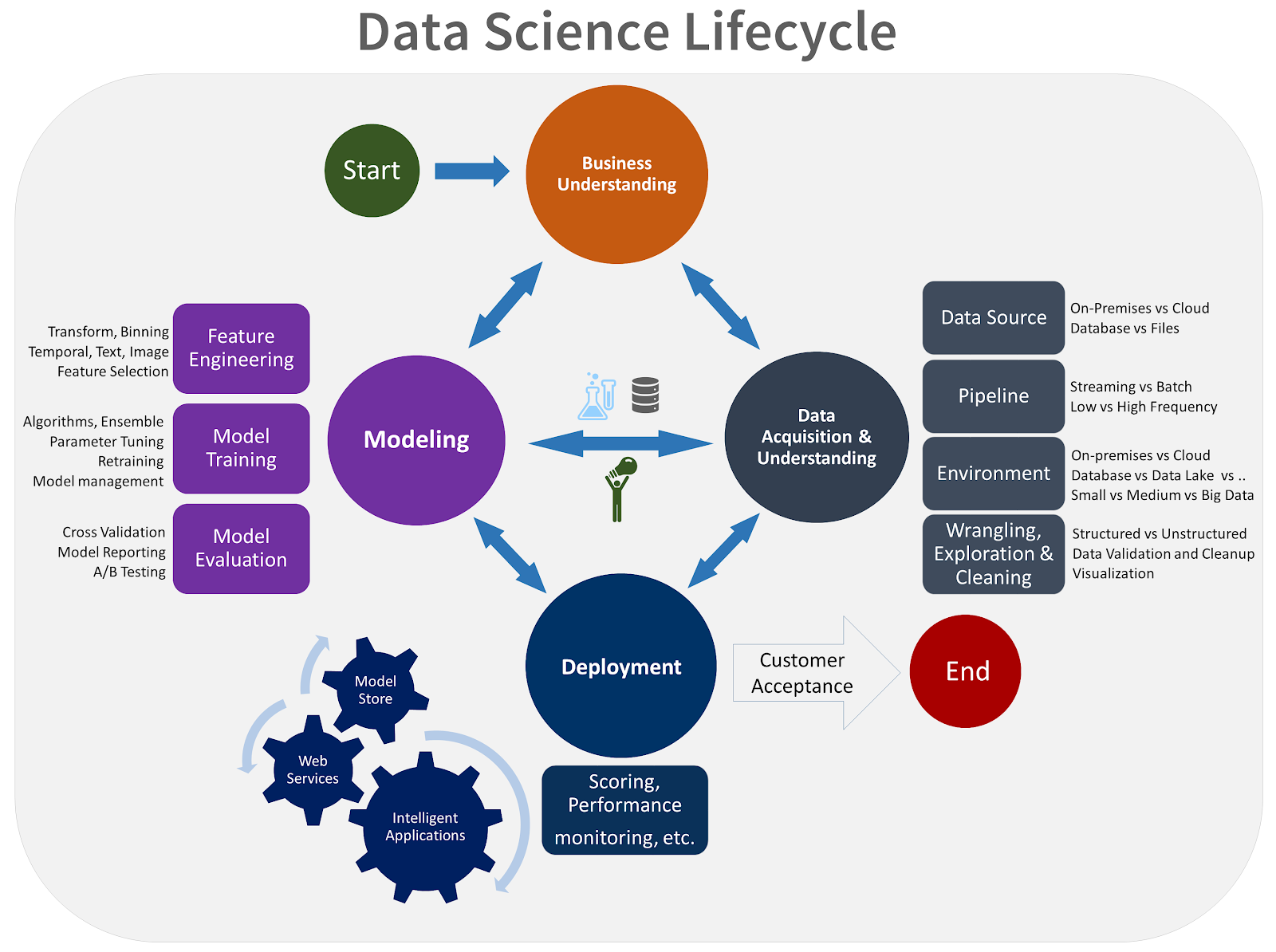

Typical steps of a machine-learning process. Mainstream machine-learning education and research focus on the modeling steps themselves, using provided datasets.

Comparatively little attention is paid to how data is collected and labeled. Similarly, little attention is usually paid to how the learned models might actually be used for a real task. Rarely is there any discussion of the larger system that might produce the data or use the model’s predictions. Many researchers and practitioners have expressed frustrations with this somewhat narrow focus on model training due to various incentives in the research culture, for example, in Wagstaff’s 2012 essay “Machine Learning that Matters” and Sambasivan et al.’s 2021 study “Everyone wants to do the model work, not the data work.” Outside of Big Tech organizations with lots of experience, this also leaves machine-learning practitioners with little guidance when they want to turn models into products, as can often be observed in many struggling teams and failing start-ups.

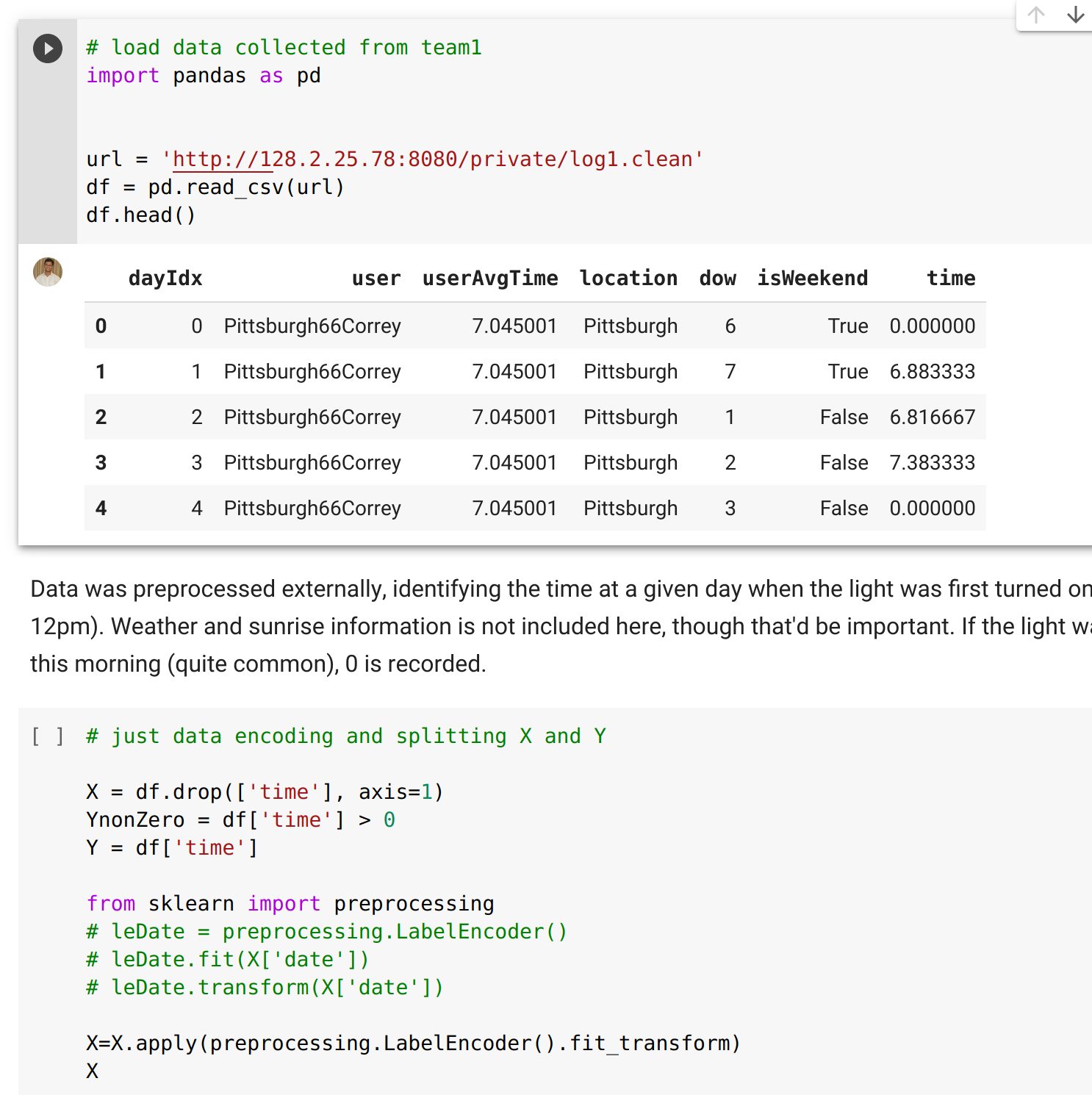

Automating Pipelines and MLOps (ML Engineering)

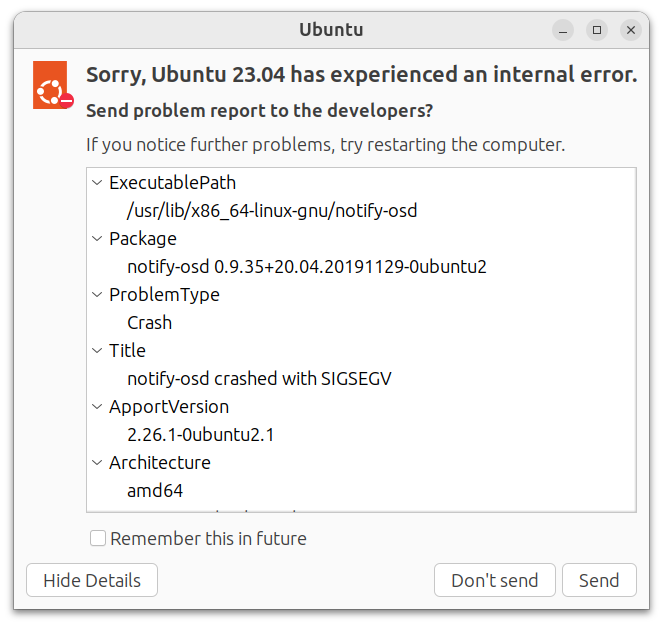

With the increasing use of machine learning in production systems, engineers have noticed various practical problems in deploying and maintaining machine-learned models. Traditionally, models might be learned in a notebook or with some script, then serialized (“pickled”), and then embedded in a web server that provides an API for making predictions. This seems easy enough at first, but real-world systems become complex quickly.
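As a rough illustration of this traditional workflow, the sketch below uses only the Python standard library. The toy linear "model" stored as a parameter dictionary, the `predict_json` helper, the request format, and the port are all hypothetical choices made for this example; a real project would more likely serialize a model trained with a machine-learning library and serve it through a web framework.

```python
import json
import pickle
from http.server import BaseHTTPRequestHandler, HTTPServer

# Step 1: "train" in a notebook or script. Here the learned parameters of a
# toy linear classifier are simply hard-coded (assumption for illustration).
trained_params = {"weights": [0.8, -0.4], "bias": 0.1}

# Step 2: serialize ("pickle") the model so it can be shipped to the server.
serialized = pickle.dumps(trained_params)

# Step 3: the web server loads the serialized model once at start-up.
params = pickle.loads(serialized)


def predict(features: list) -> int:
    """Toy linear decision rule: 1 if the weighted sum is positive, else 0."""
    score = sum(w * x for w, x in zip(params["weights"], features)) + params["bias"]
    return int(score > 0)


def predict_json(body: bytes) -> bytes:
    """Turn a JSON request body into a JSON prediction response."""
    features = json.loads(body)["features"]
    return json.dumps({"prediction": predict(features)}).encode()


class PredictionHandler(BaseHTTPRequestHandler):
    """Minimal HTTP API: POST a JSON body, receive a JSON prediction."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        response = predict_json(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8080) -> None:
    """Start the prediction API; blocks until interrupted."""
    HTTPServer(("localhost", port), PredictionHandler).serve_forever()
```

Calling `serve()` starts the API locally. Note what the sketch leaves out: input validation, error handling, model versioning, scaling, and monitoring, which is where the complexity discussed next comes from.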

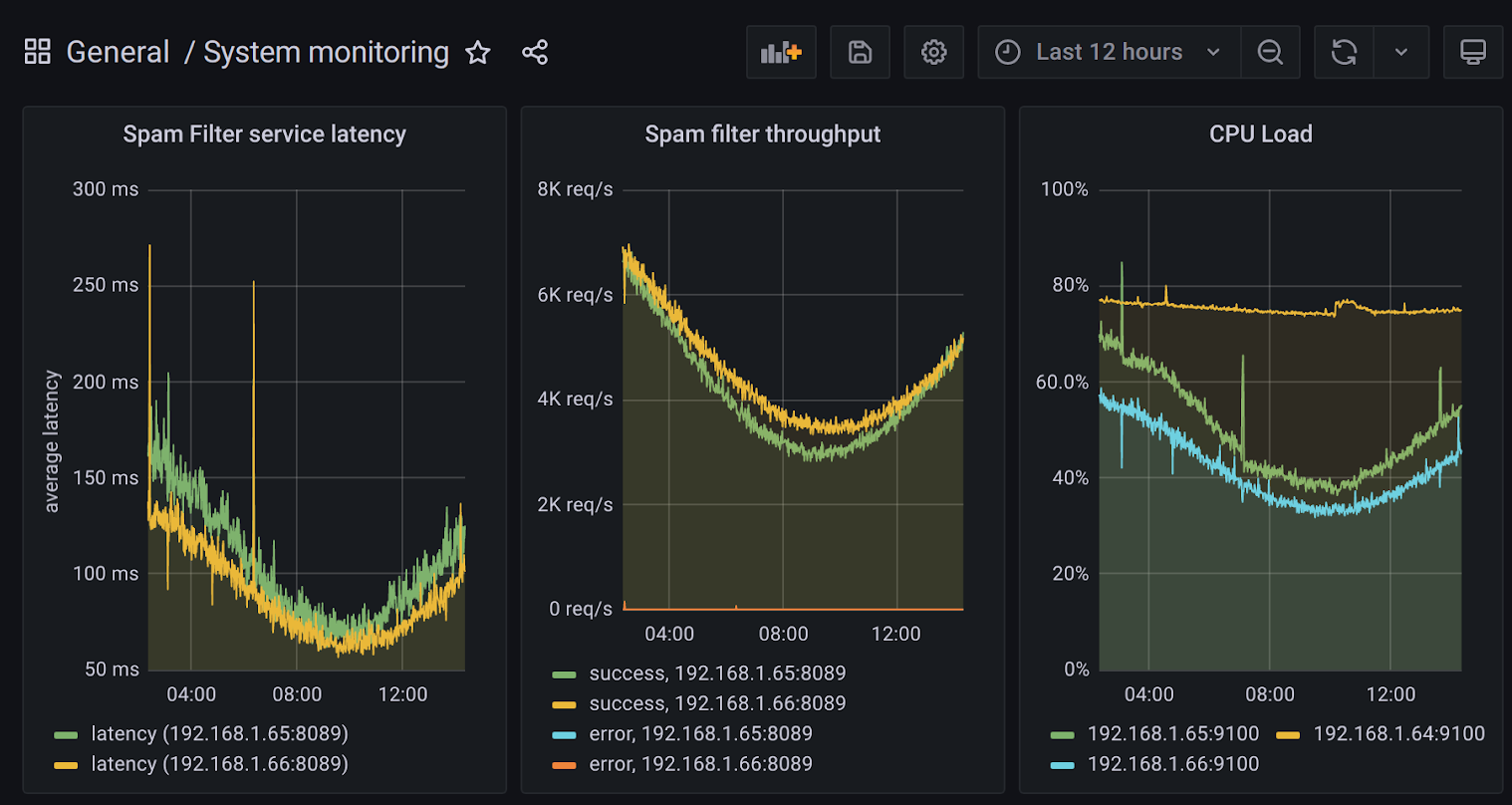

When models are used in production systems, scaling the system with changing demand becomes increasingly important, often using cloud infrastructure. To operate the system in production, we might want to monitor service quality in real time. Similarly, with very large datasets, model training and updating can become challenging to scale. Manual steps in learning and deploying models become tedious and error-prone when models need to be updated regularly, either due to continuous experimentation and improvement or due to routine updates to handle various forms of distribution shifts. Experimental data science code is often derided as being of low quality by software engineering standards, often monolithic, with minimal error handling, and barely tested, which does not foster confidence in regular or automated deployments.

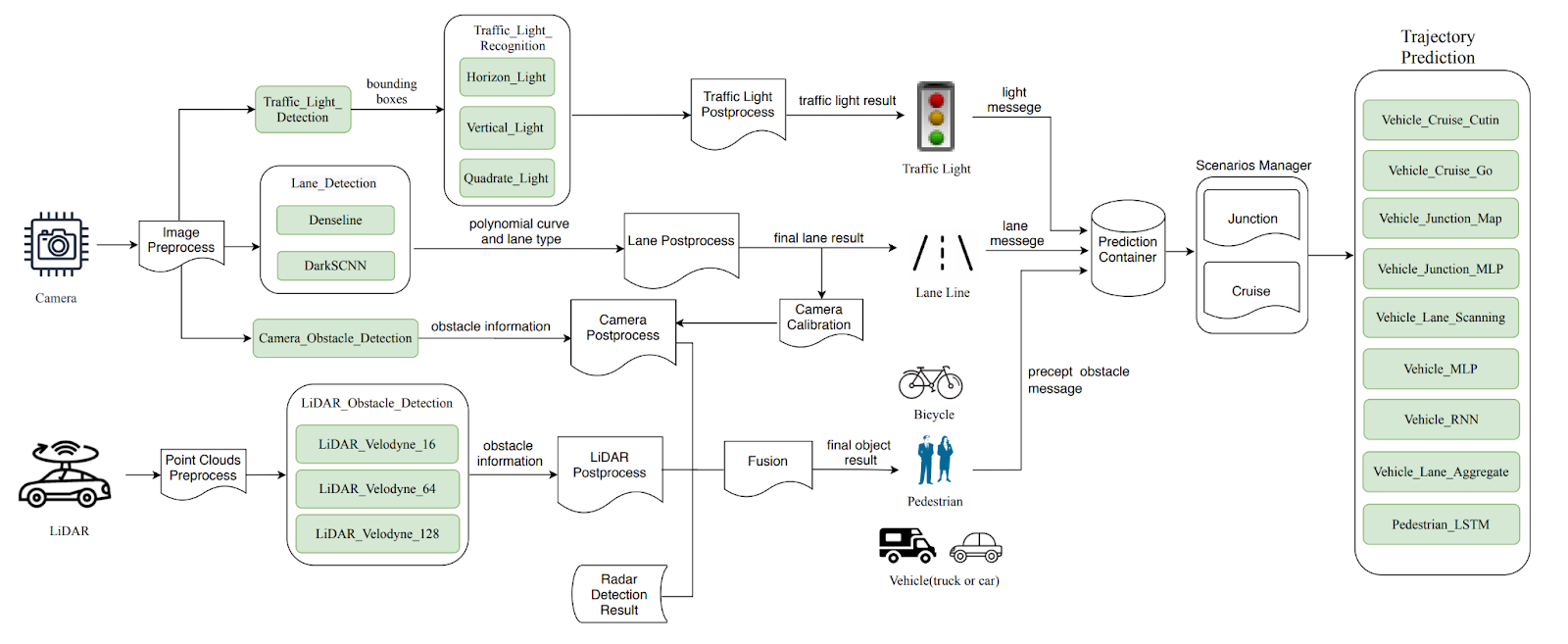

Machine-learning practitioners working on production systems increasingly widen their focus from modeling to the entire ML pipeline, including deployment and monitoring, with a heavy focus on automation.

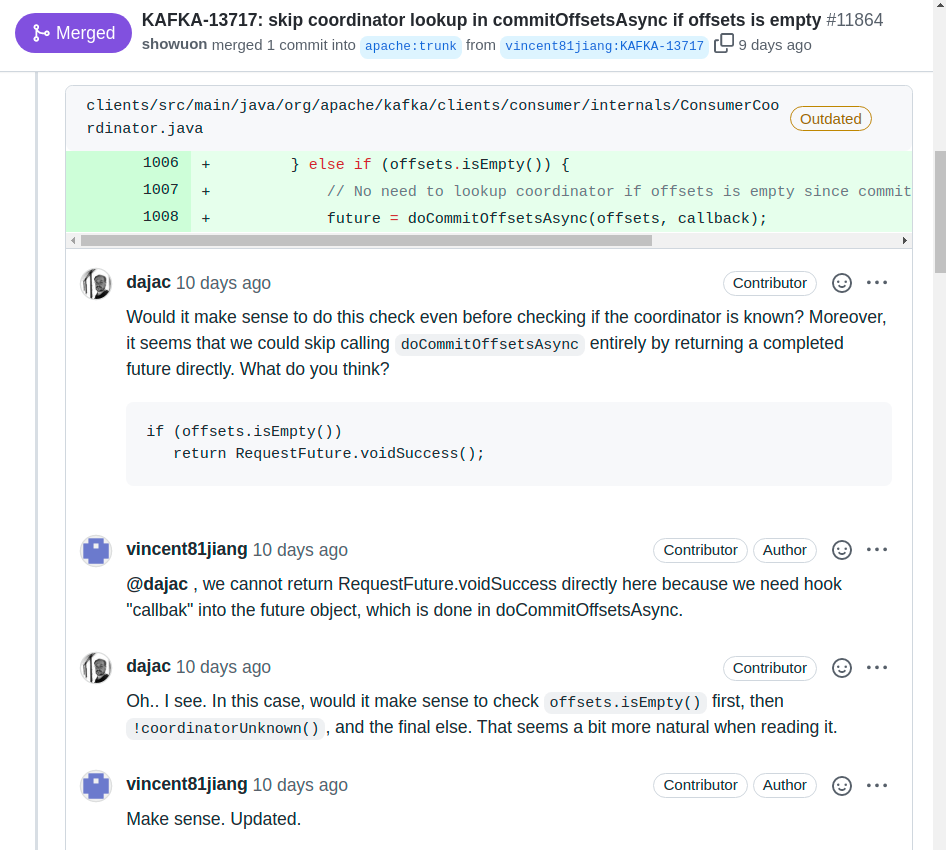

All this has put increasing attention on distributed training, deployment, quality assurance, and monitoring, supported with automation of machine-learning pipelines, often under the label MLOps. Recent tools automate many common tasks of wrapping models into scalable web services, regularly updating them, and monitoring their execution. Increasing attention is paid to scalability achieved in model training and model serving through massive parallelization in cloud environments. While many teams originally implemented this kind of infrastructure for each project and maintained substantial amounts of infrastructure code, as described prominently in the well-known 2015 technical debt article from a Google team, nowadays many competing open-source MLOps tools and commercial MLOps solutions exist.

The amount of code for actual model training is small compared to the large amount of infrastructure code needed to automate model training, serving, and monitoring. These days, much of this infrastructure is readily available through off-the-shelf MLOps tools. Figure from 🗎 Sculley, David, Gary Holt, Daniel Golovin, Eugene Davydov, Todd Phillips, Dietmar Ebner, Vinay Chaudhary, Michael Young, Jean-Francois Crespo, and Dan Dennison. “Hidden Technical Debt in Machine Learning Systems.” In Advances in Neural Information Processing Systems, pp. 2503–2511. 2015.

In recent years, the nature of machine-learning pipelines has also changed in some projects. Foundation models have introduced a shift from training custom models toward prompting large general-purpose models, often trained and controlled by external organizations. Increasingly, multiple models and prompts are chained together to perform tasks, and multiple ML and non-ML components together can perform sophisticated tasks, such as combining search and generative models in retrieval-augmented generation. This increasing complexity in machine-learning pipelines is supported by all kinds of emerging tooling.
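To make the chaining of non-ML and ML components more concrete, here is a minimal sketch of retrieval-augmented generation. It assumes a toy keyword-overlap search and a caller-supplied `generate` function standing in for a call to an externally hosted model; the function names, the prompt template, and the example documents are all hypothetical.

```python
from typing import Callable


def retrieve(query: str, documents: list, k: int = 1) -> list:
    """Non-ML search step: rank documents by word overlap with the query."""
    query_words = set(query.lower().split())

    def overlap(doc: str) -> int:
        return len(query_words & set(doc.lower().split()))

    return sorted(documents, key=overlap, reverse=True)[:k]


def answer(query: str, documents: list, generate: Callable[[str], str]) -> str:
    """Chain retrieval and generation: retrieved text becomes part of the prompt."""
    context = "\n".join(retrieve(query, documents))
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {query}"
    return generate(prompt)


# Made-up documents for illustration only.
docs = [
    "Transcripts are stored for 30 days.",
    "Payments are processed monthly.",
]


def fake_model(prompt: str) -> str:
    # Placeholder for an external model call; it just echoes the context line.
    return prompt.splitlines()[1]


print(answer("How long are transcripts stored?", docs, fake_model))
```

Even in this tiny sketch, the quality of the final answer depends on the search step, the prompt template, and the model together, which is one reason such pipelines are harder to test than a single model.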

Researchers and consultants report that shifting a team's mindset from models to machine-learning pipelines is challenging. Data scientists are often used to working with private datasets and local workspaces (e.g., in computational notebooks) to create models. Migrating code toward an automated machine-learning pipeline, where each step is automated and tested, requires a substantial shift of mindsets and a strong engineering focus. This engineering focus is not necessarily valued by all team members; for example, data scientists frequently report resenting having to do too much infrastructure work and how it prevents them from focusing on their models. At the same time, many data scientists eventually appreciate the additional benefits of being able to experiment more rapidly in production and deploy improved models with confidence and at scale.

ML-Enabled Software Products

Notwithstanding the increased focus on automation and engineering of machine-learning pipelines, this pipeline view and MLOps are entirely model-centric. The pipeline starts with model requirements and ends with deploying the model as a reliable and scalable service, but it does not consider other parts of the system, how the model interacts with those, or where the model requirements come from. Zooming out, the entire purpose of the machine-learning pipeline is to create a model that will be used as one component of a larger system, potentially with additional components for training and monitoring the model.

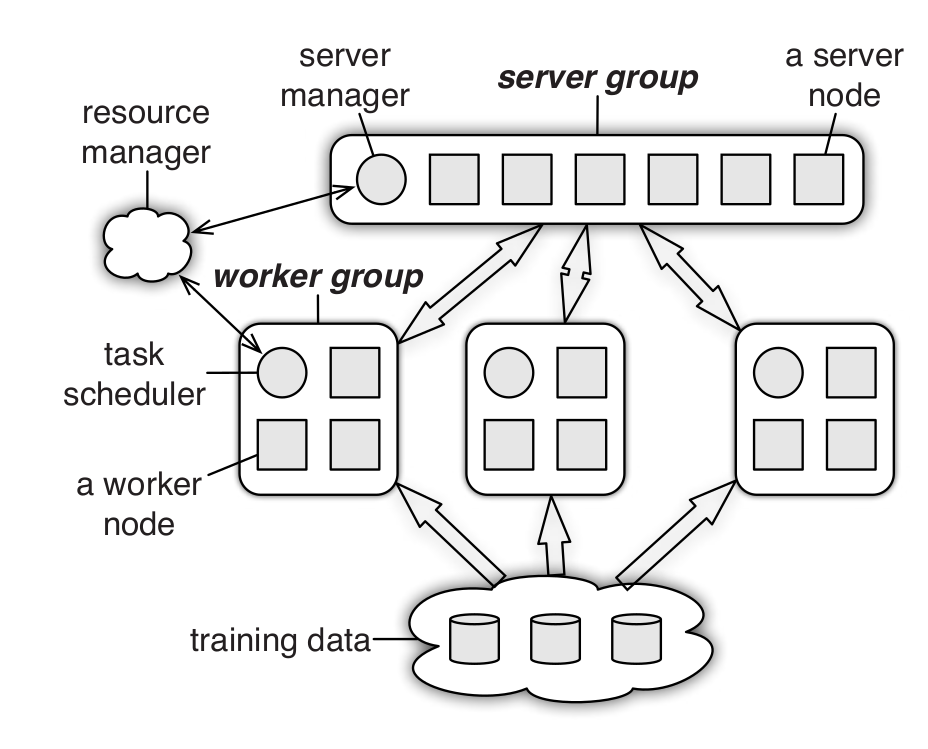

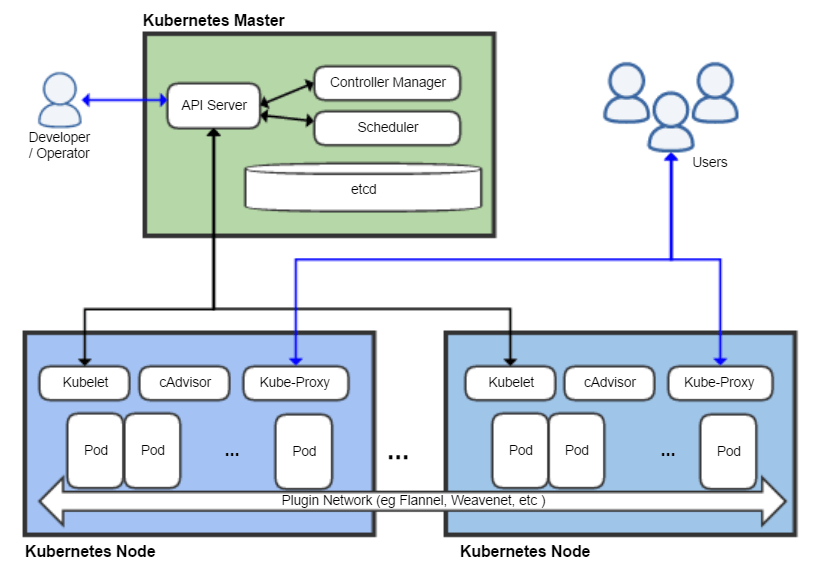

The ML pipeline corresponds to all activities for producing, deploying, and updating the ML component. The resulting ML component is part of a larger system.

As we will discuss throughout this book, key challenges of building production systems with machine-learning components arise at the interface between these ML components and non-ML components within the system and how they, together, form the system behavior as it interacts with the environment and pursues the system goals. There is a constant tension between the goals and requirements of the overall system and the requirements and design of individual ML and non-ML components: Requirements for the entire system influence model requirements as well as requirements for model monitoring and pipeline automation. For example, in the transcription scenario, user-interface designers may suggest model requirements to produce confidence scores for individual words and alternative plausible transcriptions to provide a better user experience; operators may suggest model requirements for latency and memory demand during inference. Such model requirements originating from the needs of how the model is integrated into a system may constrain data scientists in what models they can choose. Conversely, the capabilities of the model influence the design of non-ML parts of the system and guide what assurances we can make about the entire system. For example, in the transcription scenario, the accuracy of predictions may influence the user-interface design and to what degree humans must be kept in the loop to check and fix the automatically generated transcripts; inference costs set bounds on what prices are needed to cover operating costs, shaping possible business models. In general, model capabilities shape what system quality we can promise customers.
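The interplay between model output and user-interface design in the transcription scenario can be sketched in a few lines. The sketch assumes the model returns a confidence score per word; the data format, the `words_needing_review` helper, and the 0.8 threshold are hypothetical choices that a real team would negotiate between data scientists and user-interface designers.

```python
def words_needing_review(transcript: list, threshold: float = 0.8) -> list:
    """Return positions of words the UI should highlight for a human to check.

    `transcript` is a list of (word, confidence) pairs, with confidence in [0, 1].
    """
    return [i for i, (_, confidence) in enumerate(transcript) if confidence < threshold]


# Made-up model output for illustration.
transcript = [("meet", 0.97), ("at", 0.99), ("ate", 0.55), ("pm", 0.91)]
print(words_needing_review(transcript))  # [2]
```

A lower threshold means fewer interruptions for the user but more uncorrected mistakes, so the right value is a system-level decision rather than a property of the model alone.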

Systems Thinking

Given how machine learning is part of a larger system, it is important to pay attention to the entire system, not just the machine-learning components. We need a system-wide approach with an interdisciplinary team that involves all stakeholders.

Systems thinking is the name for a discipline that focuses on how systems interact with the environment and how components within the system interact. For example, Donella Meadows defines a system as “a set of inter-related components that work together in a particular environment to perform whatever functions are required to achieve the system's objective.” Systems thinking postulates that everything is interconnected, that combining parts often leads to new emergent behaviors that are not apparent from the parts themselves, and that it is essential to understand the dynamics of a system in an environment where actions have effects and may form feedback loops.

A system consists of components working together toward the system goal. The system is situated in and interacts with the environment.

As we will explore throughout this book, many common challenges in building production systems with machine-learning components are really system challenges that require understanding the interaction of ML and non-ML components and the interaction of the system with the environment.

Beyond the Model

A model-centric view of machine learning allows data scientists to focus on the hard problems involved in training more accurate models and allows MLOps engineers to build an infrastructure that enables rapid experimentation and improvement in production. However, the common model-centric view of machine learning misses many relevant facets of building high-quality products using those models.

System Quality versus Model Quality

Outside of machine-learning education and research, model accuracy is almost never a goal in itself, but a means to support the goal of a system or a goal of the organization building the system. A common system goal is to satisfy some user needs, often grounded in an organizational goal of making money (we will discuss this in more detail in chapter Setting and Measuring Goals). The accuracy of a machine-learned model can directly or indirectly support such a system goal. For example, a better audio transcription model is more useful to users and might attract more users paying for transcriptions, and predicting the audit risk in tax software may provide value to the users and may encourage sales of additional services. In both cases, the system goal is distinct from the model’s accuracy but supported more or less directly by it.

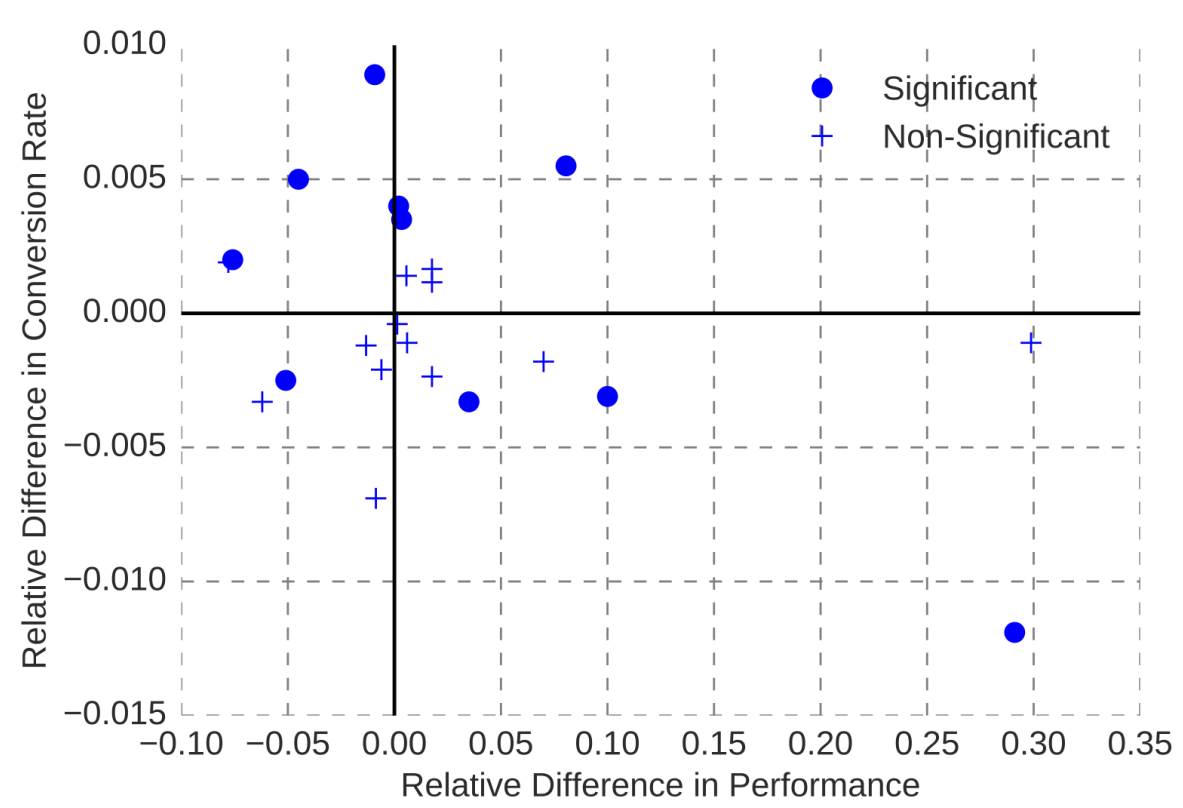

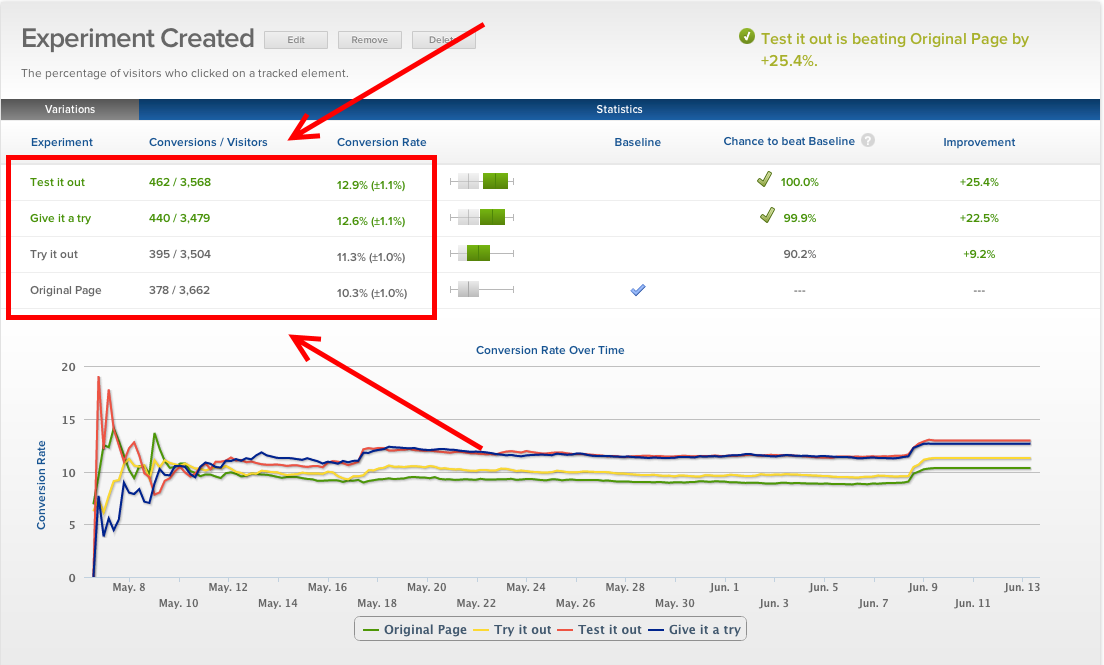

Interestingly, improvements in model accuracy do not even have to translate to improvements in system goals. For example, experience at the hotel booking site Booking.com has shown that improving the accuracy of models predicting different aspects of a customer’s travel preferences used for guiding hotel suggestions does not necessarily improve hotel sales, and in some cases, improved accuracy even impacted sales negatively. It is not always clear why this happens, as there is no direct causal link between model accuracy and sales. One possible explanation for such observations offered by the team was that the model becomes so accurate that it feels creepy: it seems to know too much about a customer’s travel plans when they have not actively shared that information. In the end, more accurate models were not adopted if they did not contribute to overall system improvement.

Observations from online experiments at Booking.com show that model accuracy improvement (“Relative Difference in Performance”) does not necessarily translate to improvements in system outcomes (“Conversion Rate”). From Bernardi et al. “150 Successful Machine Learning Models: 6 Lessons Learned at Booking.com.” In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2019.

Accurate predictions are important for many uses of machine learning in production systems, but they are not always the most important priority. Accurate predictions may not be critical for the system goal and “good enough” predictions may actually be good enough. For example, for the audit prediction feature in the tax system, roughly approximating the audit risk is likely sufficient for many users and for the software vendor who might try to upsell users on additional services or insurance. In other cases, marginally better model accuracy may come at excessive costs, for example, from acquiring or labeling more data, from longer training times, and from privacy concerns; a simpler, cheaper, less accurate model might often be preferable considering the entire system. Finally, other parts of the system can mitigate many problems from inaccurate predictions, such as a better user interface that explains predictions to users, mechanisms for humans to correct mistakes, or system-level non-ML safety features (see chapter Planning for Mistakes). Those design considerations beyond the model may make a much more important contribution to system quality than a small improvement in model accuracy.

A narrow focus only on model accuracy that ignores how the model interacts with the rest of the system will miss opportunities to design the model to better support the overall system goals and balance various desired qualities.

Predictions have Consequences

Most software systems, including those with machine-learning components, interact with the environment. They aim to influence how people behave or directly control physical devices, such as sidewalk delivery robots and autonomous trains. As such, predictions can and should have consequences in the real world, positive as well as negative. Reasoning about interactions between the software and the environment outside of the software (including humans) is a system-level concern and cannot be done by reasoning about the software or the machine-learned component alone.

From a software-engineering perspective, it is prudent to consider every machine-learned model as an unreliable function within a system that sometimes will return unexpected results. The way we train models by fitting a function to match observations rather than providing specifications makes mistakes unavoidable (it is not even clear that we can always clearly determine what constitutes a mistake). Since we must accept eventual mistakes, it is up to other parts of the system, including user interaction design or safety mechanisms, to compensate for such mistakes.

A smart toaster may occasionally burn some toast, but it shall never burn down the entire kitchen. [Online-only figure.]

Consider Geoff Hulten’s example of a smart toaster that uses sensors and machine learning to decide how long to toast some bread, achieving consistent outcomes to the desired level of toastedness. As with all machine-learned models, we should anticipate eventual mistakes and the consequences of those mistakes on the system and the environment. Even a highly accurate machine-learned model may eventually suggest toasting times that would burn the toast or even start a kitchen fire. While the software is not unsafe itself, the way it actuates the heating element of the toaster could be a safety hazard. More training data and better machine-learning techniques may make the model more accurate and robust and reduce the rate of mistakes, but they will not eliminate mistakes entirely. Hence, the system designer should look at means to make the system safe despite the unreliable machine-learning component. For the toaster, safety mechanisms could include (1) non-ML code to cap toasting time at a maximum duration, (2) an additional non-ML component using a temperature sensor to stop toasting when the sensor readings exceed a threshold, or (3) installing a thermal fuse (a cheap hardware component that interrupts power when it overheats) as a non-ML, non-software safety mechanism. With these safety mechanisms, the toaster may still occasionally burn some toast when the machine-learned model makes mistakes, but it will not burn down the kitchen.
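As a minimal sketch, the first two safety mechanisms could look like the following Python code. The function names, the 240-second cap, and the 250 °C threshold are assumptions chosen for illustration, not values from a real toaster. The thermal fuse is a hardware component and has no counterpart in code.

```python
import math

# Illustrative limits only; a real product would derive them from hazard analysis.
MAX_TOAST_SECONDS = 240.0
MAX_TEMPERATURE_C = 250.0


def safe_toast_duration(predicted_seconds: float) -> float:
    """Mechanism (1): cap the model's suggested toasting time with non-ML code."""
    if math.isnan(predicted_seconds):
        # An unusable prediction results in no toasting rather than a guess.
        return 0.0
    return max(0.0, min(predicted_seconds, MAX_TOAST_SECONDS))


def should_stop_heating(elapsed_seconds: float,
                        target_seconds: float,
                        temperature_c: float) -> bool:
    """Mechanism (2): stop when time is up or the sensor exceeds the threshold."""
    time_is_up = elapsed_seconds >= target_seconds
    too_hot = temperature_c > MAX_TEMPERATURE_C
    return time_is_up or too_hot
```

Neither function looks inside the model. Both only bound what the model's output is allowed to do to the heating element, which is why they keep working even when the model is wrong.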

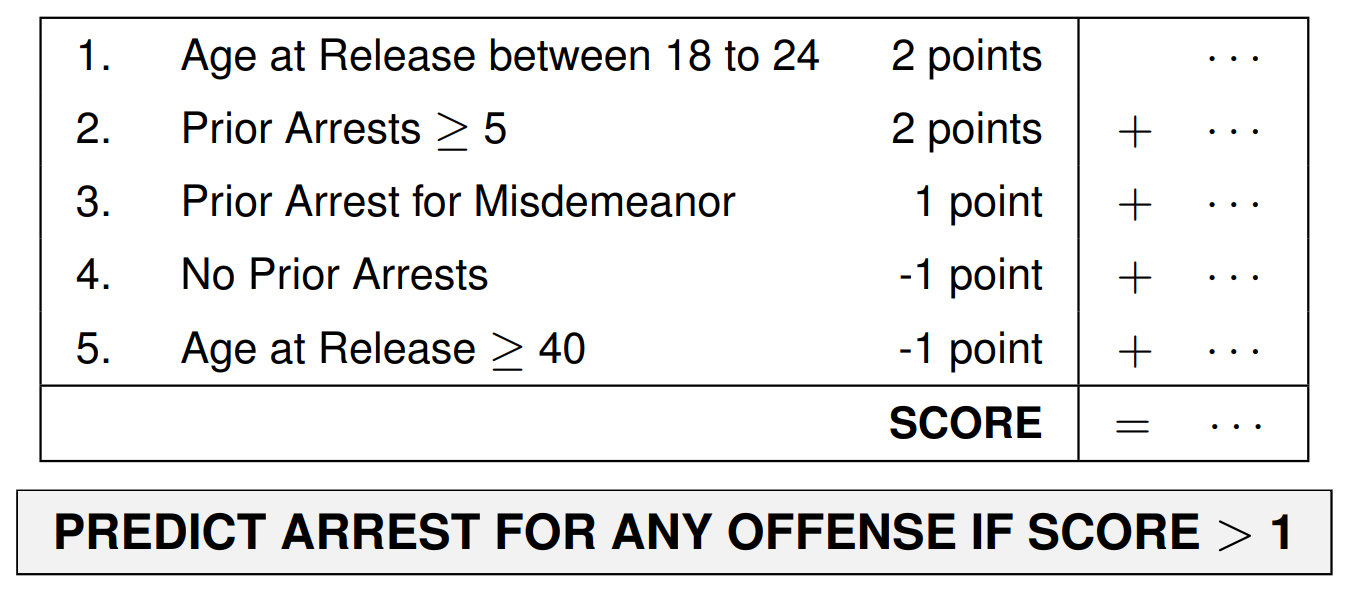

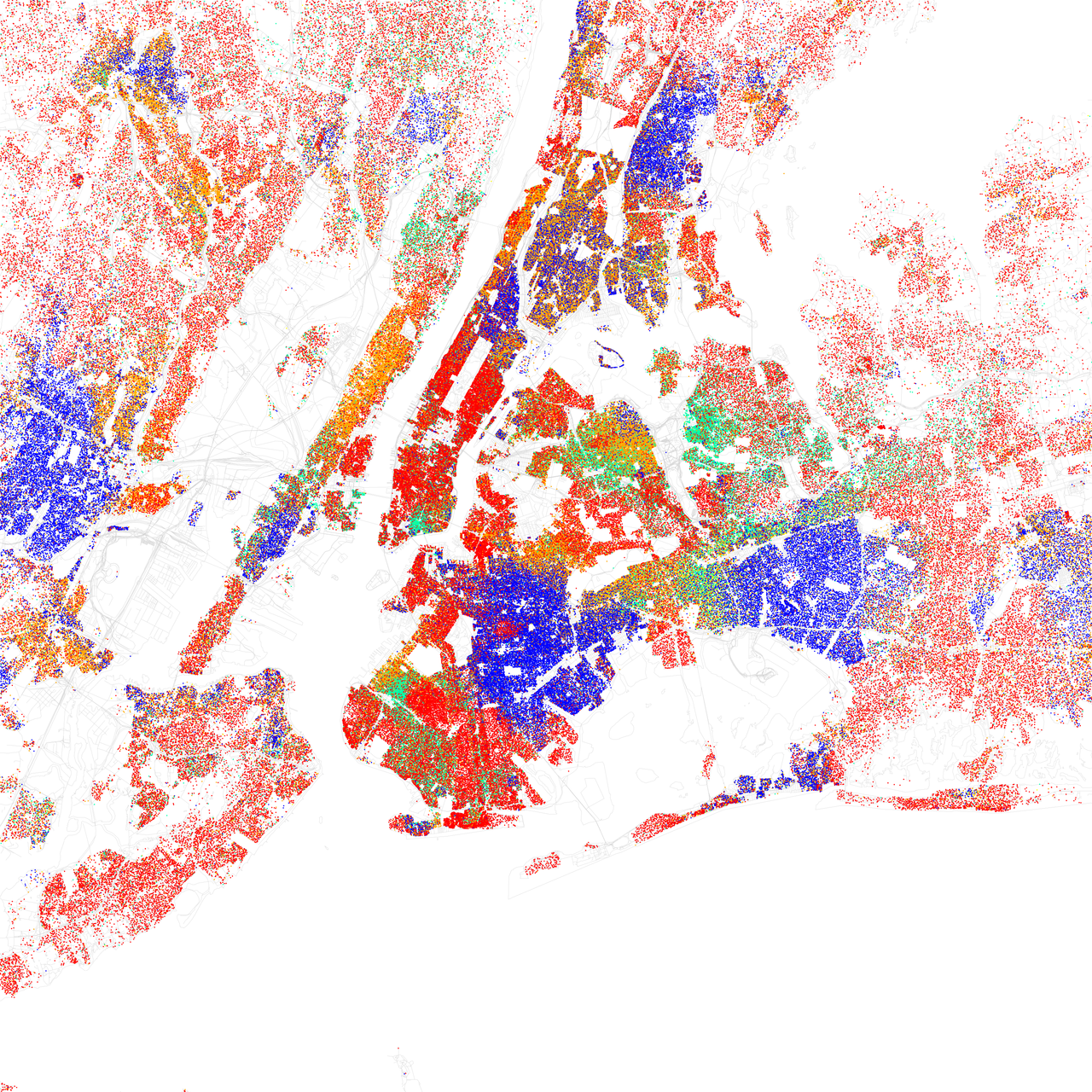

Consequences of predictions also manifest in feedback loops. As people react to a system’s predictions at scale, we may observe that predictions of the model reinforce or amplify behavior the model initially learned, thus producing more data to support the model’s predictions. Feedback loops can be positive, such as in public health campaigns against smoking when people adjust behavior in intended ways, providing role models for others and more data to support the intervention. However, many feedback loops are negative, reinforcing bias and bad outcomes. For example, a system predicting more crime in an area overpoliced due to historical bias may lead to more policing and more arrests in that area; this then provides additional training data reinforcing the discriminatory prediction in future versions of the model even if the area does not have more crime than others. Understanding feedback loops requires reasoning about the entire system and how it interacts with the environment.
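The policing example can be illustrated with a deliberately simplified toy simulation. It is a sketch under strong assumptions, not a model of any real system: the "model" simply predicts crime in proportion to past recorded arrests, patrols are allocated according to that prediction, and new arrests grow with patrol presence. The function name and all numbers are hypothetical.

```python
def simulate_patrols(initial_arrests, true_crime_rate, rounds, patrols_per_round=100):
    """Toy feedback loop: patrols follow recorded arrests, arrests follow patrols.

    Returns each area's share of all recorded arrests after the given rounds.
    """
    recorded = list(initial_arrests)
    for _ in range(rounds):
        total = sum(recorded)
        # "Prediction": an area's crime share equals its share of recorded arrests.
        shares = [r / total for r in recorded]
        for area, share in enumerate(shares):
            patrols = patrols_per_round * share
            # Arrests depend on how many patrols are present, not only on crime.
            recorded[area] += patrols * true_crime_rate[area]
    total = sum(recorded)
    return [r / total for r in recorded]


# Two areas with identical true crime rates, but historically skewed records.
final_shares = simulate_patrols([60.0, 40.0], [0.1, 0.1], rounds=20)
```

In this toy setup, the first area keeps about 60 percent of the recorded arrests after every round, even though both areas have the same true crime rate: the data produced by the system continues to support the initial skew and never corrects it.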

Just as safety is a system-level property that requires understanding how the software interacts with the environment, so are many other qualities of interest, including security, privacy, fairness, accountability, energy consumption, and user satisfaction. In the machine-learning community, many of these qualities are now discussed under the umbrella of responsible AI. A model-centric view that focuses only on analyzing a machine-learned model without considering how it is used in a system cannot make any assurances about system-level qualities such as safety and will have difficulty anticipating feedback loops. Responsible engineering requires a system-level approach.

Human-AI Interaction Design

System designers have powerful tools to shape the system through user interaction design. A key question is whether and how to keep humans in the loop in the system. Some systems aim to fully automate a task, by making automated decisions and automatically actuating effects in the real world. However, often we want to keep humans in the loop to give them agency by either asking them up front whether to take an action or by giving them a path to appeal or reverse an automated decision. For example, the audit risk prediction in the tax software example prompts users with a question of whether to buy audit insurance whereas an automated fraud-detection system on an auction website likely should automatically take down problematic content but provide a path to appeal.
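For the fraud-detection example, automated action with a path to appeal could be sketched as follows. The `Listing` class, the function names, and the 0.9 score threshold are hypothetical and serve only to illustrate the design. A real system would also need notifications, audit logs, and reviewer tooling.

```python
from dataclasses import dataclass

TAKEDOWN_THRESHOLD = 0.9  # assumed cutoff on the model's fraud score


@dataclass
class Listing:
    listing_id: str
    visible: bool = True
    appeal_open: bool = False


def apply_fraud_prediction(listing: Listing, fraud_score: float) -> None:
    """Automated decision: hide the listing, but open a path to appeal."""
    if fraud_score >= TAKEDOWN_THRESHOLD:
        listing.visible = False
        listing.appeal_open = True


def resolve_appeal(listing: Listing, reviewer_confirms_fraud: bool) -> None:
    """Human decision: a reviewer can reverse the automated takedown."""
    if not listing.appeal_open:
        return
    listing.appeal_open = False
    if not reviewer_confirms_fraud:
        listing.visible = True
```

The model acts immediately, which limits harm from actual fraud, while the appeal step gives a human the final say when the model was wrong.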

Well-designed systems can appear magical and simply work, releasing humans from tedious and repetitive tasks, while still providing space for oversight and human agency. But as models can make mistakes, automation can lead to frustration, dangerous situations, and harm. Also, humans are generally not good at monitoring systems and may have no chance of checking model predictions even if they tried. Furthermore, machine learning can be used in dark ways to subtly manipulate humans, persuading them to take actions that are not in their best interest. All this places a heavy burden on responsible system design, considering not only whether a model is accurate but also how it is used for automation or to interact with users.

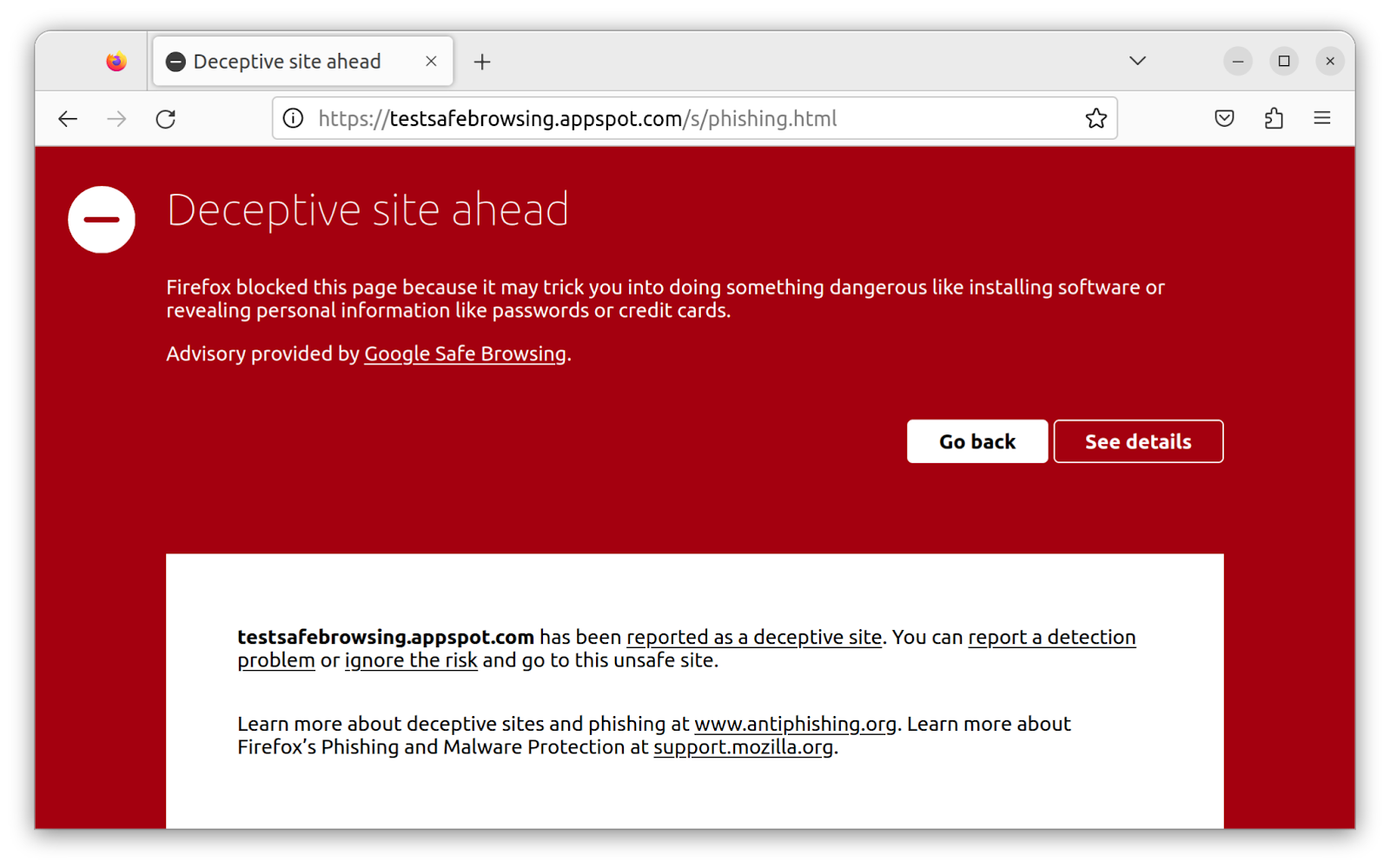

A smart safe browsing feature uses machine learning to warn of malicious websites. The list of sites may or may not be reviewed by experts. The end-user interaction is designed to automatically stop the user from reaching the page, though the user can still deliberately override the warning. [Online-only figure.]

A model-centric approach without considering the rest of the system misses many important decisions and opportunities for user interaction design that build trust, provide safeguards, and ensure the autonomy and dignity of people affected by the system. Model qualities, including its accuracy and its ability to report confidence or explanations, shape possible and necessary user interface design decisions; conversely, user design considerations may influence model requirements.

Data Acquisition and Anticipating Change

A model-centric view often assumes that data is given and representative, even though system designers often have substantial flexibility in deciding what data to collect and how. Furthermore, in contrast to the fixed datasets in course projects and competitions, data distributions are rarely stable over time in production.

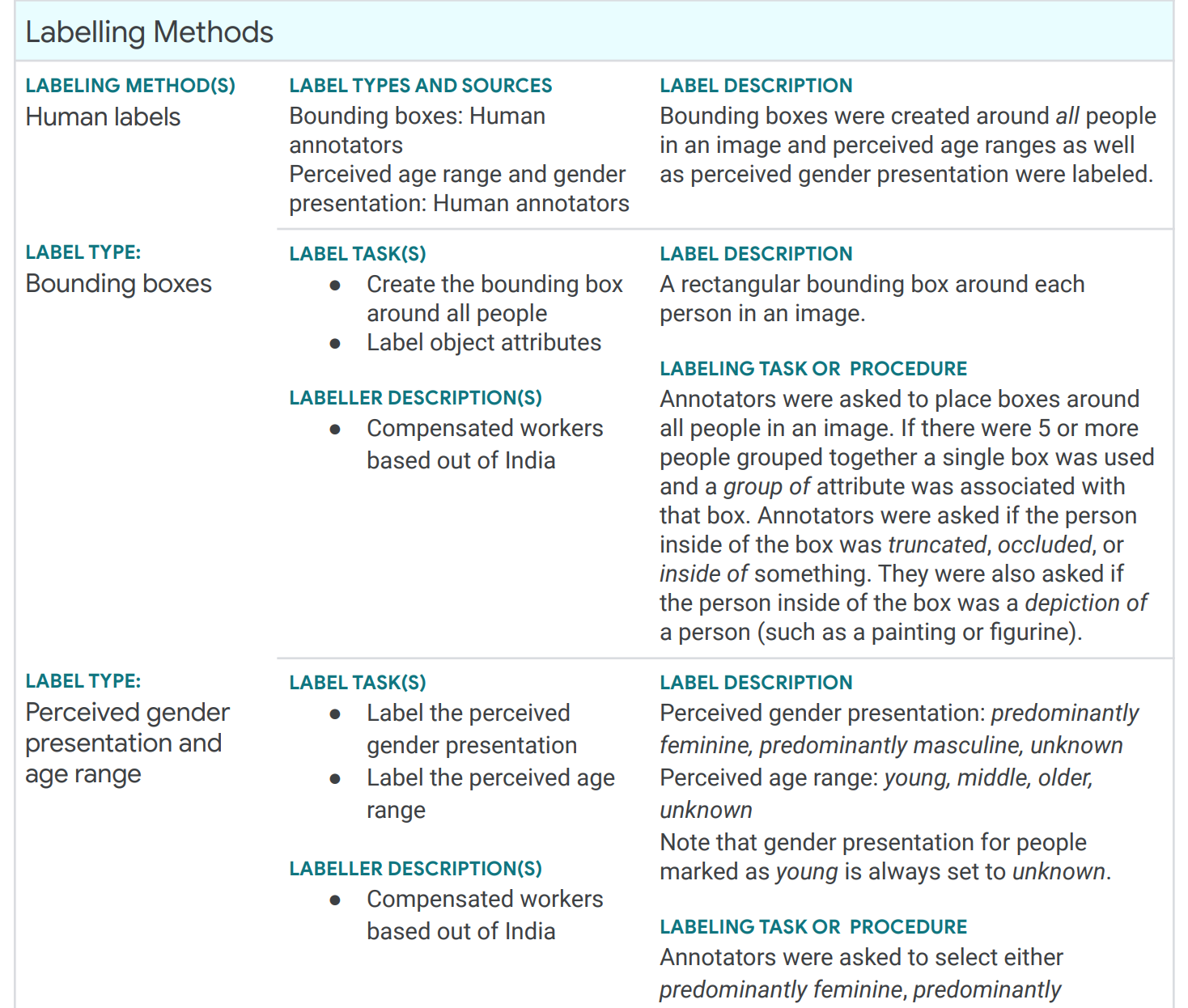

Compared to feature engineering and modeling and even deployment and monitoring, data collection and data quality work is often undervalued. Data collection must be an integral part of system-level planning. This is far from trivial and includes many considerations, such as educating the people collecting and entering data, training labelers and assuring label quality, planning data collection up front and future updates of data, documenting data quality standards, considering cost, setting incentives, and establishing accountability.

It can be very difficult to acquire representative and generalizable training data in the first place. In addition, data distributions can drift as the world changes (see chapter Data Quality), leading to outdated models that perform increasingly worse over time unless updated with fresh data. For example, the tax software may need to react when the government changes its strategy for whom it audits, especially after certain tax evasion strategies become popular; the transcription service must update its model regularly to support new names that recently started to occur in recorded conversations, such as names of new products and politicians. Anticipating the need to continuously collect training data and evaluate model and system quality in production will allow developers to prepare a system proactively.

System design, especially the design of user interfaces, can substantially influence what data is generated by the system. For example, the provider of the tax software may provide a free service to navigate a tax audit to encourage users to report whether an audit happened, to then validate or improve the audit risk model. Even just observing users downloading old tax returns may provide some indication that an audit is happening, and the system could hide access to the return behind a pop-up dialog asking whether the user is being audited. In the transcription scenario, providing an attractive user interface to show and edit transcription results could allow us to observe how users change the transcript, thereby providing insights about probable transcription mistakes; more invasively, we could directly ask users which of multiple possible transcriptions for specific audio snippets is correct. All these designs could potentially be used as a proxy to measure model quality and also to collect additional labeled training data from observing user interactions.
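For the transcription scenario, observing user edits could be sketched with Python's standard `difflib` module. The function name `extract_corrections` and the word-level comparison are assumptions for illustration. User edits are only a noisy proxy for mistakes, since users may also rephrase text that was transcribed correctly.

```python
import difflib


def extract_corrections(model_transcript: str, user_edited: str):
    """Return (model_words, user_words) pairs for spans the user changed."""
    before = model_transcript.split()
    after = user_edited.split()
    matcher = difflib.SequenceMatcher(a=before, b=after, autojunk=False)
    corrections = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            # Each changed span is a candidate transcription mistake and a
            # candidate training label supplied by the user.
            corrections.append((" ".join(before[i1:i2]), " ".join(after[j1:j2])))
    return corrections


pairs = extract_corrections(
    "please call doctor smith tomorrow",
    "please call doctor smyth tomorrow",
)
# pairs == [("smith", "smyth")]
```

Counting such corrections per transcript gives a rough quality signal in production, and the corrected spans can be reviewed as additional labeled data.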

Again, a focus on the entire system rather than a model-centric focus encourages a more holistic view of aspects of data collection and encourages design for change, preparing the entire system for constant updates and experimentation.

Interdisciplinary Teams

In the introduction, we already argued that building products with machine-learning components requires a wide range of skills, typically by bringing together team members with different specialties. Taking a system-wide view reinforces this notion further: machine-learning expertise alone is not sufficient, and even engineering skills and MLOps expertise to build machine-learning pipelines and deploy models cover only small parts of a larger system. At the same time, software engineers need to understand the basics of machine learning to understand how to integrate machine-learned components and plan for mistakes. When considering how the model interacts with the rest of the system and how the system interacts with the environment, we need to bring together diverse skills. For collaboration and communication in these teams, machine-learning literacy is important, but so is understanding system-level concerns like user needs, safety, and fairness.

On Terminology

Unfortunately, there is no standard term for referring to building software products with machine-learning components. In this quickly evolving field, there are many terms, and those terms are not used consistently. In this book, we adopt the term “ML-enabled product” or simply the descriptive “software product with machine-learning components” to emphasize the broad focus on an entire system for a specific purpose, in contrast to a more narrow model-centric focus of data science education or MLOps pipelines. The terms “ML-enabled system,” “ML-infused system,” and “ML-based system” have been used with similar intentions.

In this book, we talk about machine learning and usually focus on supervised learning. Technically, machine learning is a subfield of artificial intelligence, where machine learning refers to systems that learn functions from data. There are many other artificial intelligence approaches that do not involve machine learning, such as constraint satisfaction solvers, expert systems, and probabilistic programming, but many of them do not share the same challenges arising from missing specifications of machine-learned models. In colloquial conversation and media, machine learning and artificial intelligence are used largely interchangeably and AI is the favored term among public speakers, media, and politicians. For most terms discussed here, there is also a version that uses AI instead of ML, for example, “AI-enabled system.”

The software-engineering community sometimes distinguishes between “Software Engineering for Machine Learning” (short SE4ML, SE4AI, SEAI) and “Machine Learning for Software Engineering” (short ML4SE, AI4SE). The former refers to applying and tailoring software-engineering approaches to problems related to machine learning, which includes challenges of building ML-enabled systems as well as more model-centric challenges like testing machine-learned models in isolation. The latter refers to using machine learning to improve software-engineering tools, such as using machine learning to detect bugs in code or to automatically generate code. While software-engineering tools with machine-learned components technically are ML-enabled products too, they are not necessarily representative of the typical end-user-focused products discussed in this book, such as transcription services or tax software.

The term “AI Engineering” and the job title of an “ML engineer” are gaining popularity to highlight a stronger focus on engineering in machine-learning projects. They most commonly refer to building automated pipelines, deploying models, and MLOps and, hence, tend to skew model-centric rather than system-wide, though some people use the terms with a broader meaning. The terms ML System Engineering and SysML (and sometimes AI Engineering) refer to the engineering of infrastructure for machine learning, such as building efficient distributed learning algorithms and scalable inference services. Data engineering usually refers to roles focused on data management and data infrastructure at scale, typically deeply rooted in database and distributed-systems skills.

To further complicate terminology, ModelOps, AIOps, and DataOps have been suggested and are distinct from MLOps. ModelOps is a business-focused rebrand of MLOps, tracking how various models fit into an enterprise strategy. AIOps tends to refer to the use of artificial intelligence techniques (including machine learning and AI planning) during the operation of software systems, for example, to automatically scale cloud resources based on predicted demand. DataOps refers to agile methods and automation in business data analytics.

Summary